量子机器学习示例¶

我们这里使用VQNet实现了多个量子机器学习示例。

带参量子线路在分类任务的应用¶

1. QVC示例¶

这个例子使用VQNet实现了论文 Circuit-centric quantum classifiers 中可变量子线路进行二分类任务。 该例子用来判断一个二进制数是奇数还是偶数。通过将二进制数编码到量子比特上,通过优化线路中的可变参数,使得该线路z方向测量值可以指示该输入为奇数还是偶数。

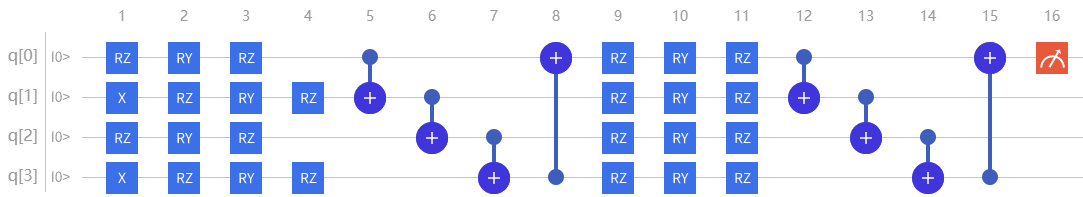

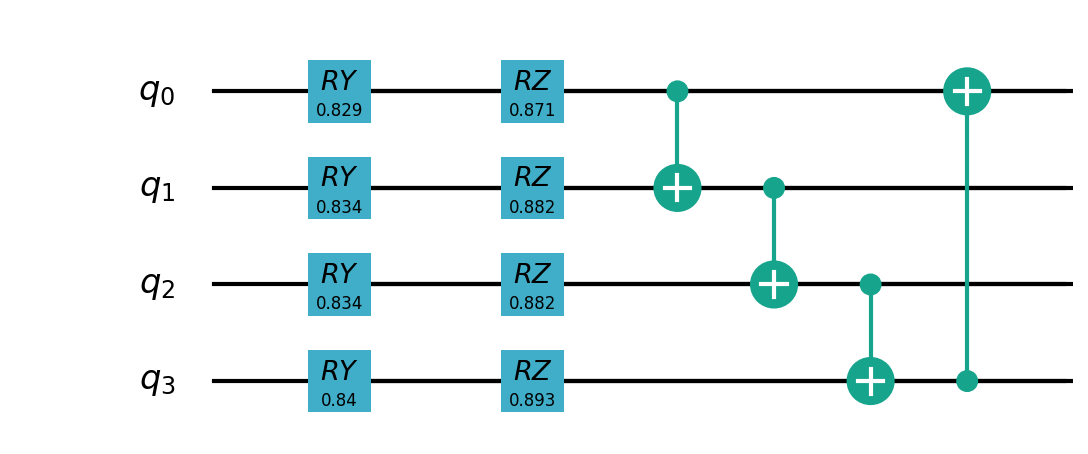

量子线路¶

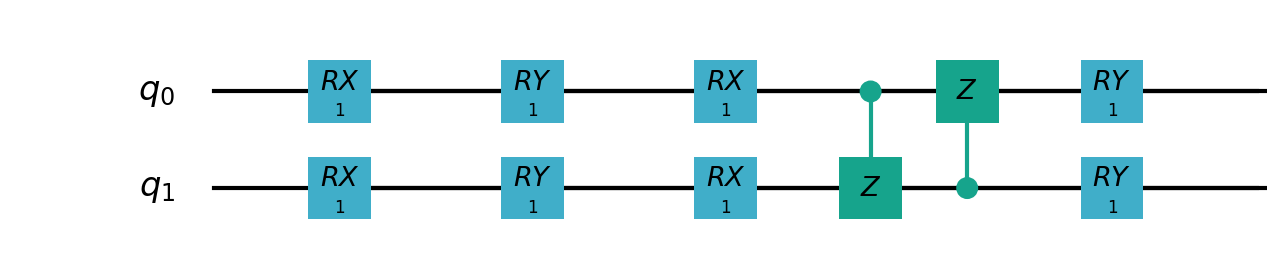

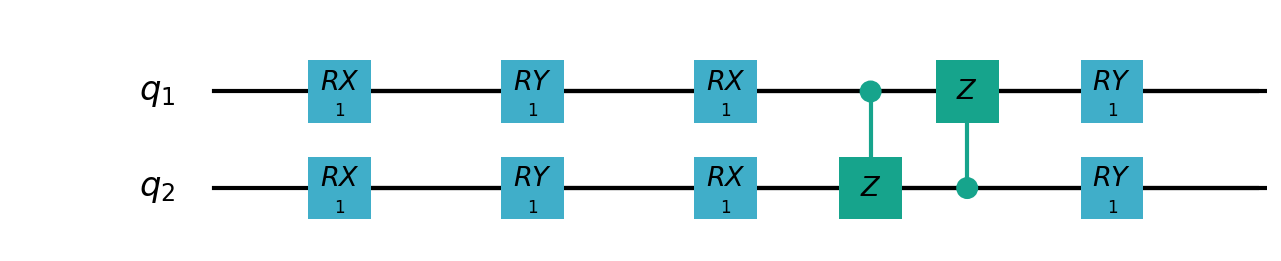

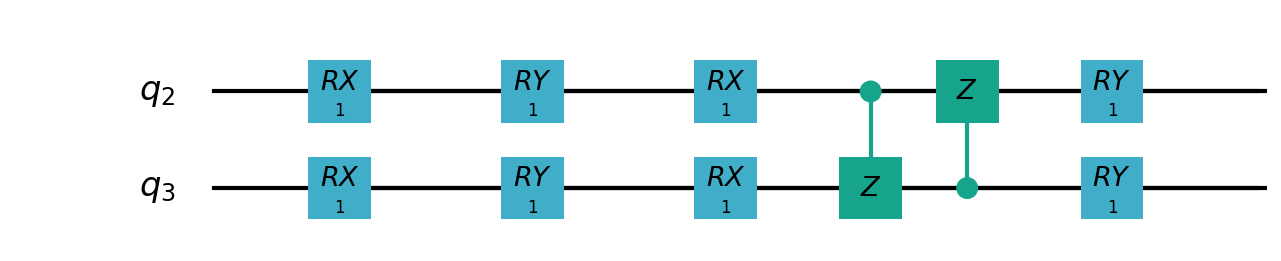

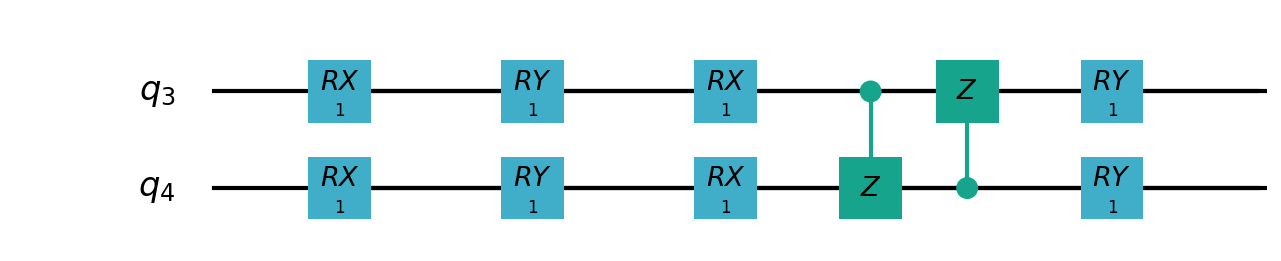

变分量子线路通常定义一个子线路,这是一种基本的电路架构,可以通过重复层构建复杂变分电路。

我们的电路层由多个旋转逻辑门以及将每个量子位与其相邻的量子位纠缠在一起的 CNOT 逻辑门组成。

我们还需要一个线路将经典数据编码到量子态上,使得线路测量的输出与输入有关联。

本例中,我们把二进制输入编码到对应顺序的量子比特上。例如输入数据1101被编码到4个量子比特。

import pyqpanda as pq

def qvc_circuits(input,weights,qlist,clist,machine):

def get_cnot(nqubits):

cir = pq.QCircuit()

for i in range(len(nqubits)-1):

cir.insert(pq.CNOT(nqubits[i],nqubits[i+1]))

cir.insert(pq.CNOT(nqubits[len(nqubits)-1],nqubits[0]))

return cir

def build_circult(weights, xx, nqubits):

def Rot(weights_j, qubits):

circult = pq.QCircuit()

circult.insert(pq.RZ(qubits, weights_j[0]))

circult.insert(pq.RY(qubits, weights_j[1]))

circult.insert(pq.RZ(qubits, weights_j[2]))

return circult

def basisstate():

circult = pq.QCircuit()

for i in range(len(nqubits)):

if xx[i] == 1:

circult.insert(pq.X(nqubits[i]))

return circult

circult = pq.QCircuit()

circult.insert(basisstate())

for i in range(weights.shape[0]):

weights_i = weights[i,:,:]

for j in range(len(nqubits)):

weights_j = weights_i[j]

circult.insert(Rot(weights_j,nqubits[j]))

cnots = get_cnot(nqubits)

circult.insert(cnots)

circult.insert(pq.Z(nqubits[0]))

prog = pq.QProg()

prog.insert(circult)

return prog

weights = weights.reshape([2,4,3])

prog = build_circult(weights,input,qlist)

prob = machine.prob_run_dict(prog, qlist[0], -1)

prob = list(prob.values())

return prob

模型构建¶

我们已经定义了可变量子线路 qvc_circuits 。我们希望将其用于我们VQNet的自动微分逻辑中,并使用VQNet的优化算法进行模型训练。我们定义了一个 Model 类,该类继承于抽象类 Module。

Model中使用 QuantumLayer 类这个可进行自动微分的量子计算层。qvc_circuits 为我们希望运行的量子线路,24 为所有需要训练的量子线路参数的个数,”cpu” 表示这里使用 pyQPanda 的 全振幅模拟器,4表示需要申请4个量子比特。

在 forward() 函数中,用户定义了模型前向运行的逻辑。

from pyvqnet.nn.module import Module

from pyvqnet.optim.sgd import SGD

from pyvqnet.nn.loss import CategoricalCrossEntropy

from pyvqnet.tensor.tensor import QTensor

from pyvqnet.data import data_generator as dataloader

import pyqpanda as pq

from pyvqnet.qnn.quantumlayer import QuantumLayer

from pyqpanda import *

class Model(Module):

def __init__(self):

super(Model, self).__init__()

self.qvc = QuantumLayer(qvc_circuits,24,"cpu",4)

def forward(self, x):

return self.qvc(x)

模型训练和测试¶

我们使用预先生成的随机二进制数以及其奇数偶数标签。其中数据如下:

import numpy as np

import os

qvc_train_data = [0,1,0,0,1,

0, 1, 0, 1, 0,

0, 1, 1, 0, 0,

0, 1, 1, 1, 1,

1, 0, 0, 0, 1,

1, 0, 0, 1, 0,

1, 0, 1, 0, 0,

1, 0, 1, 1, 1,

1, 1, 0, 0, 0,

1, 1, 0, 1, 1,

1, 1, 1, 0, 1,

1, 1, 1, 1, 0]

qvc_test_data= [0, 0, 0, 0, 0,

0, 0, 0, 1, 1,

0, 0, 1, 0, 1,

0, 0, 1, 1, 0]

def get_data(dataset_str):

if dataset_str == "train":

datasets = np.array(qvc_train_data)

else:

datasets = np.array(qvc_test_data)

datasets = datasets.reshape([-1,5])

data = datasets[:,:-1]

label = datasets[:,-1].astype(int)

label = np.eye(2)[label].reshape(-1,2)

return data, label

接着就可以按照一般神经网络训练的模式进行模型前传,损失函数计算,反向运算,优化器运算,直到迭代次数达到预设值。 其所使用的训练数据是上述生成的qvc_train_data,测试数据为qvc_test_data。

def get_accuary(result,label):

result,label = np.array(result.data), np.array(label.data)

score = np.sum(np.argmax(result,axis=1)==np.argmax(label,1))

return score

#示例化Model类

model = Model()

#定义优化器,此处需要传入model.parameters()表示模型中所有待训练参数,lr为学习率

optimizer = SGD(model.parameters(),lr =0.1)

#训练时候可以修改批处理的样本数

batch_size = 3

#训练最大迭代次数

epoch = 20

#模型损失函数

loss = CategoricalCrossEntropy()

model.train()

datas,labels = get_data("train")

for i in range(epoch):

count=0

sum_loss = 0

accuary = 0

t = 0

for data,label in dataloader(datas,labels,batch_size,False):

optimizer.zero_grad()

data,label = QTensor(data), QTensor(label)

result = model(data)

loss_b = loss(label,result)

loss_b.backward()

optimizer._step()

sum_loss += loss_b.item()

count+=batch_size

accuary += get_accuary(result,label)

t = t + 1

print(f"epoch:{i}, #### loss:{sum_loss/count} #####accuray:{accuary/count}")

model.eval()

count = 0

test_data,test_label = get_data("test")

test_batch_size = 1

accuary = 0

sum_loss = 0

for testd,testl in dataloader(test_data,test_label,test_batch_size):

testd = QTensor(testd)

test_result = model(testd)

test_loss = loss(testl,test_result)

sum_loss += test_loss

count+=test_batch_size

accuary += get_accuary(test_result,testl)

print(f"test:--------------->loss:{sum_loss/count} #####accuray:{accuary/count}")

epoch:0, #### loss:0.20194714764753977 #####accuray:0.6666666666666666

epoch:1, #### loss:0.19724808633327484 #####accuray:0.8333333333333334

epoch:2, #### loss:0.19266503552595773 #####accuray:1.0

epoch:3, #### loss:0.18812804917494455 #####accuray:1.0

epoch:4, #### loss:0.1835678368806839 #####accuray:1.0

epoch:5, #### loss:0.1789149840672811 #####accuray:1.0

epoch:6, #### loss:0.17410411685705185 #####accuray:1.0

epoch:7, #### loss:0.16908332953850427 #####accuray:1.0

epoch:8, #### loss:0.16382796317338943 #####accuray:1.0

epoch:9, #### loss:0.15835540741682053 #####accuray:1.0

epoch:10, #### loss:0.15273457020521164 #####accuray:1.0

epoch:11, #### loss:0.14708336691061655 #####accuray:1.0

epoch:12, #### loss:0.14155150949954987 #####accuray:1.0

epoch:13, #### loss:0.1362930883963903 #####accuray:1.0

epoch:14, #### loss:0.1314386005202929 #####accuray:1.0

epoch:15, #### loss:0.12707658857107162 #####accuray:1.0

epoch:16, #### loss:0.123248390853405 #####accuray:1.0

epoch:17, #### loss:0.11995399743318558 #####accuray:1.0

epoch:18, #### loss:0.1171633576353391 #####accuray:1.0

epoch:19, #### loss:0.11482855677604675 #####accuray:1.0

[0.3412148654]

test:--------------->loss:QTensor(0.3412148654, requires_grad=True) #####accuray:1.0

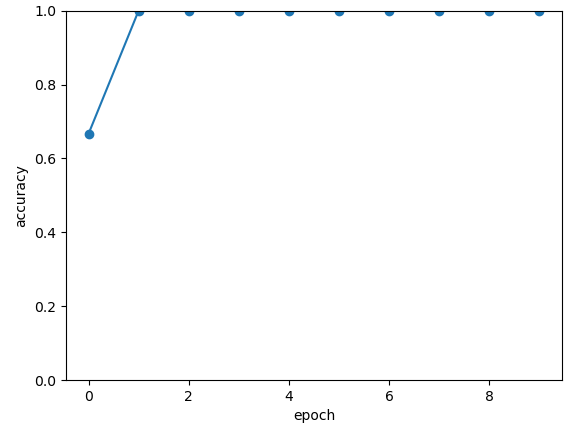

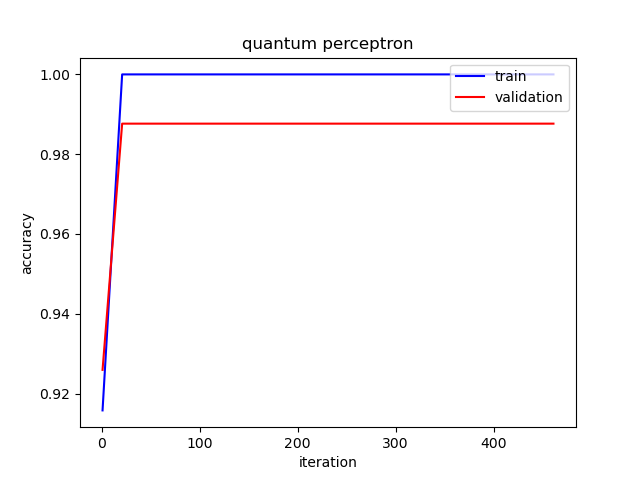

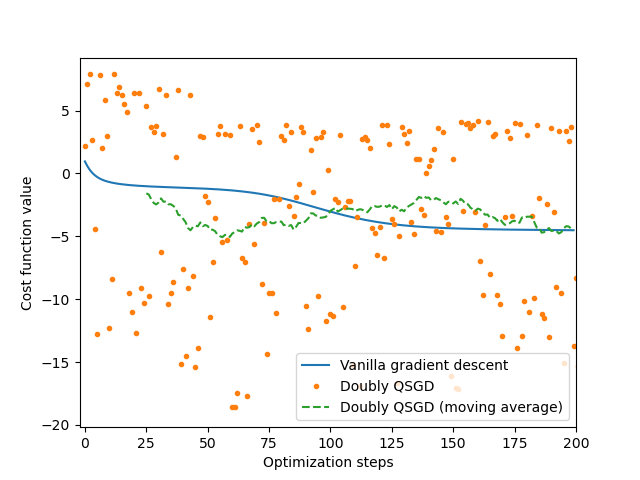

模型在测试数据上准确率变化情况:

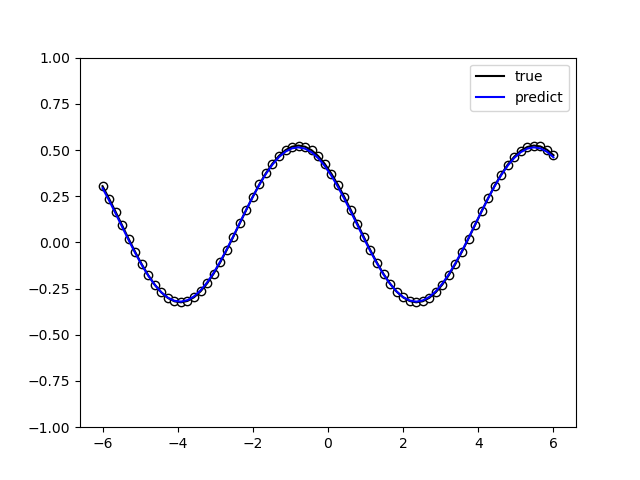

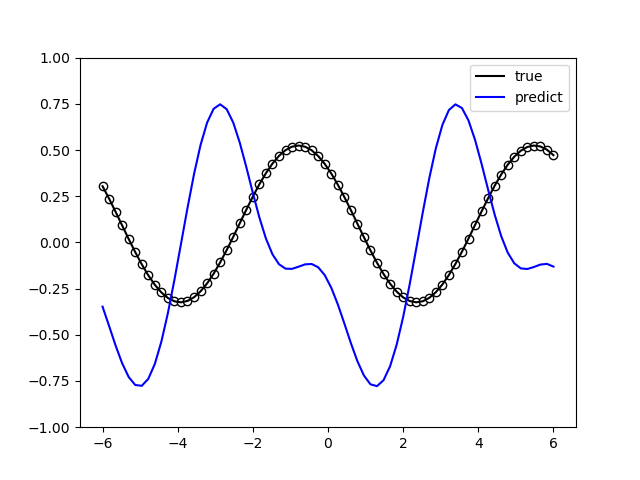

2. Data Re-uploading模型¶

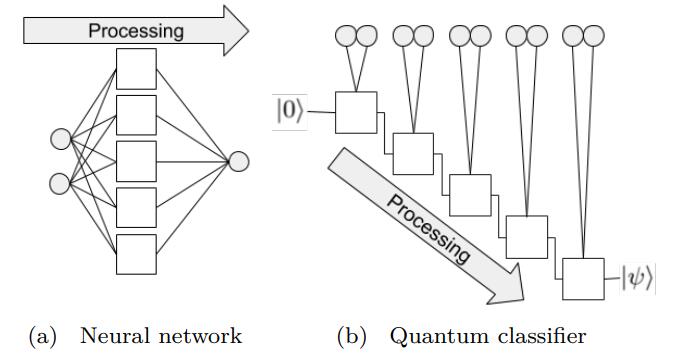

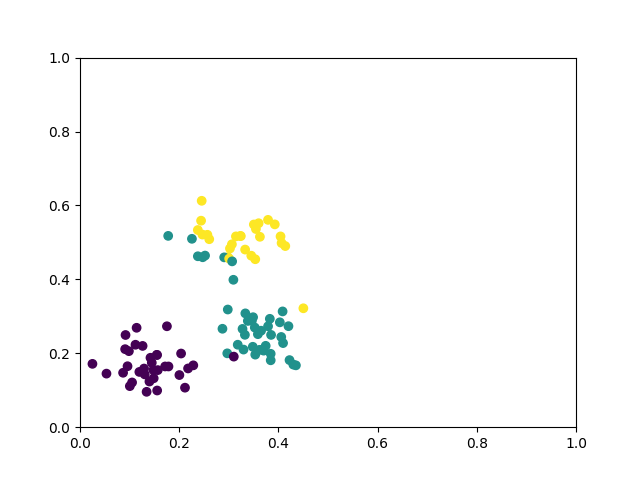

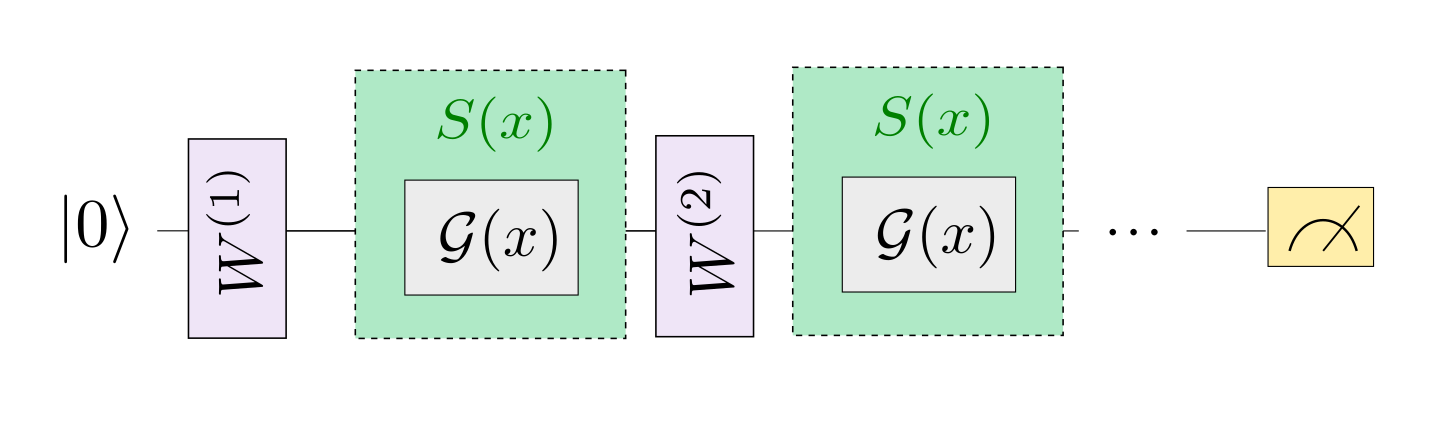

在神经网络中,每一个神经元都接受来自上层所有神经元的信息(图a)。与之相对的,单比特量子分类器接受上一个的信息处理单元和输入(图b)。 通俗地来说,对于传统的量子线路来说,当数据上传完成,可以直接通过若干幺正变换 \(U(\theta_1,\theta_2,\theta_3)\) 直接得到结果。 但是在量子数据重上传(Quantum Data Re-upLoading,QDRL)任务中,数据在幺正变换之前需要进行重新上传操作。

QDRL与经典神经网络原理图对比

"""

Parameterized quantum circuit for Quantum Data Re-upLoading

"""

import sys

sys.path.insert(0, "../")

import numpy as np

from pyvqnet.nn.linear import Linear

from pyvqnet.qnn.qdrl.vqnet_model import vmodel

from pyvqnet.optim import sgd

from pyvqnet.nn.loss import CategoricalCrossEntropy

from pyvqnet.tensor.tensor import QTensor

from pyvqnet.nn.module import Module

import matplotlib.pyplot as plt

import matplotlib

from pyvqnet.data import data_generator as get_minibatch_data

try:

matplotlib.use("TkAgg")

except: #pylint:disable=bare-except

print("Can not use matplot TkAgg")

pass

np.random.seed(42)

num_layers = 3

params = np.random.uniform(size=(num_layers, 3))

class Model(Module):

def __init__(self):

super(Model, self).__init__()

self.pqc = vmodel(params.shape)

self.fc2 = Linear(2, 2)

def forward(self, x):

x = self.pqc(x)

return x

def circle(samples: int, reps=np.sqrt(1 / 2)):

data_x, data_y = [], []

for _ in range(samples):

x = np.random.rand(2)

y = [0, 1]

if np.linalg.norm(x) < reps:

y = [1, 0]

data_x.append(x)

data_y.append(y)

return np.array(data_x), np.array(data_y)

def plot_data(x, y, fig=None, ax=None):

if fig is None:

fig, ax = plt.subplots(1, 1, figsize=(5, 5))

reds = y == 0

blues = y == 1

ax.scatter(x[reds, 0], x[reds, 1], c="red", s=20, edgecolor="k")

ax.scatter(x[blues, 0], x[blues, 1], c="blue", s=20, edgecolor="k")

ax.set_xlabel("$x_1$")

ax.set_ylabel("$x_2$")

def get_score(pred, label):

pred, label = np.array(pred.data), np.array(label.data)

score = np.sum(np.argmax(pred, axis=1) == np.argmax(label, 1))

return score

model = Model()

optimizer = sgd.SGD(model.parameters(), lr=1)

def train():

"""

Main function for train qdrl model

"""

batch_size = 5

model.train()

x_train, y_train = circle(500)

x_train = np.hstack((x_train, np.ones((x_train.shape[0], 1)))) # 500*3

epoch = 10

print("start training...........")

for i in range(epoch):

accuracy = 0

count = 0

loss = 0

for data, label in get_minibatch_data(x_train, y_train, batch_size):

optimizer.zero_grad()

data, label = QTensor(data), QTensor(label)

output = model(data)

loss_fun = CategoricalCrossEntropy()

losss = loss_fun(label, output)

losss.backward()

optimizer._step()

accuracy += get_score(output, label)

loss += losss.item()

# print(f"epoch:{i}, train_accuracy:{accuracy}")

# print(f"epoch:{i}, train_loss:{losss}")

count += batch_size

print(f"epoch:{i}, train_accuracy_for_each_batch:{accuracy/count}")

print(f"epoch:{i}, train_loss_for_each_batch:{loss/count}")

def test():

batch_size = 5

model.eval()

print("start eval...................")

x_test, y_test = circle(500)

test_accuracy = 0

count = 0

x_test = np.hstack((x_test, np.ones((x_test.shape[0], 1))))

for test_data, test_label in get_minibatch_data(x_test, y_test,

batch_size):

test_data, test_label = QTensor(test_data), QTensor(test_label)

output = model(test_data)

test_accuracy += get_score(output, test_label)

count += batch_size

print(f"test_accuracy:{test_accuracy/count}")

if __name__ == "__main__":

train()

test()

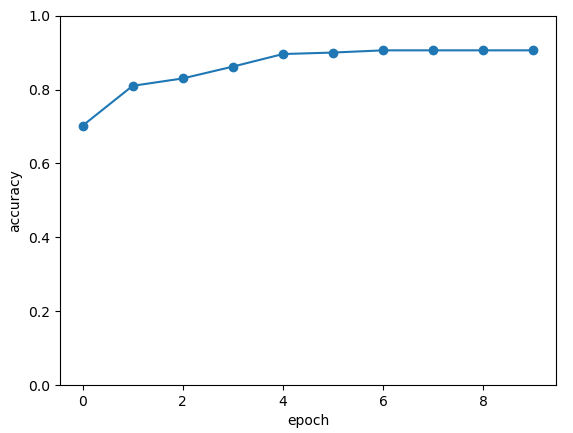

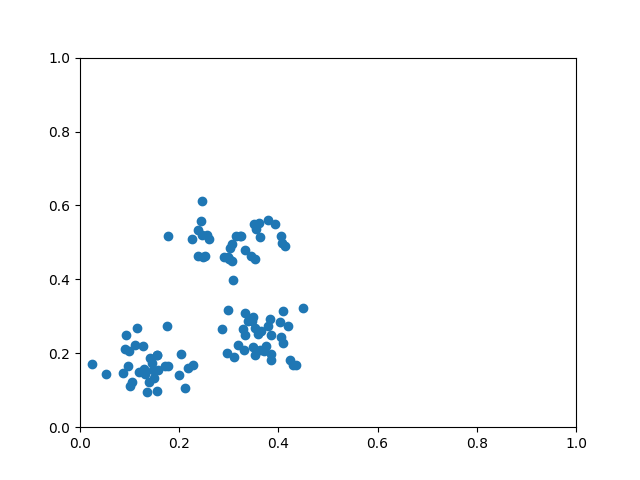

QDRL在测试数据上准确率变化情况:

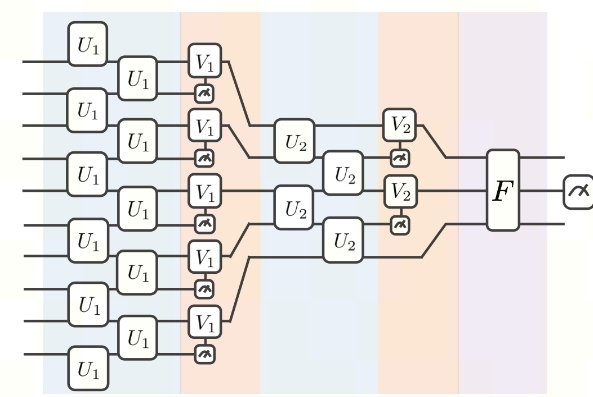

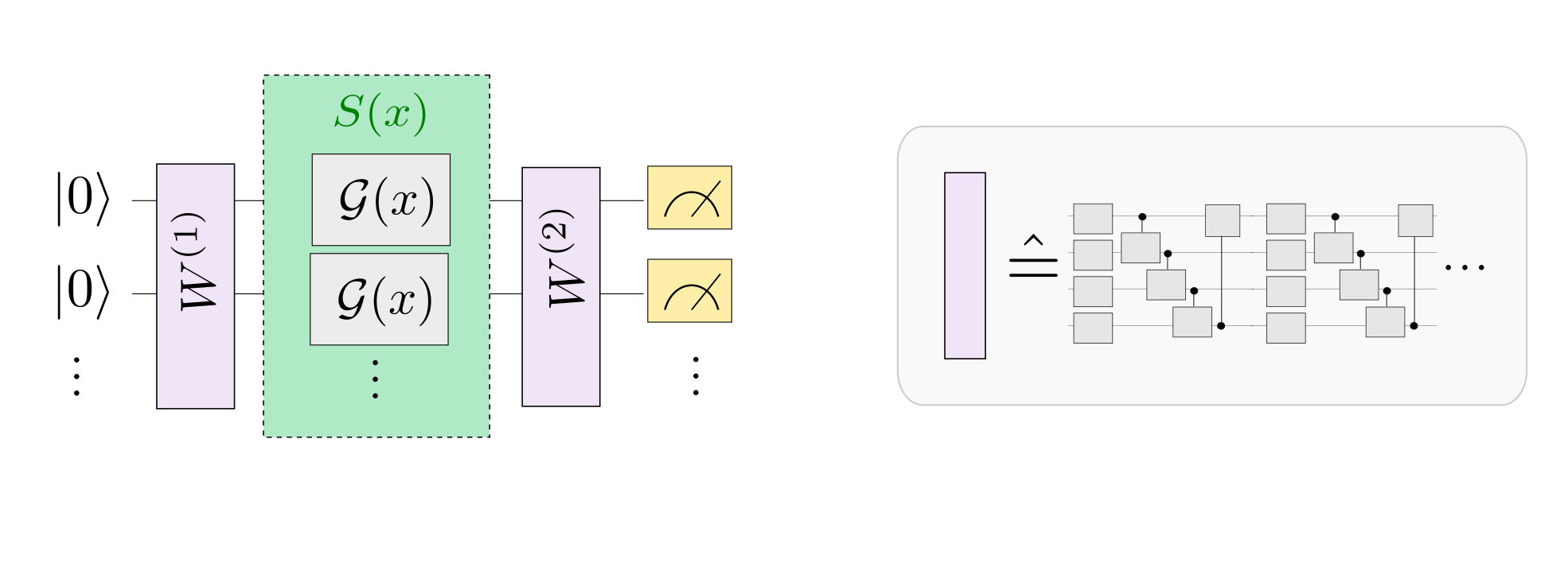

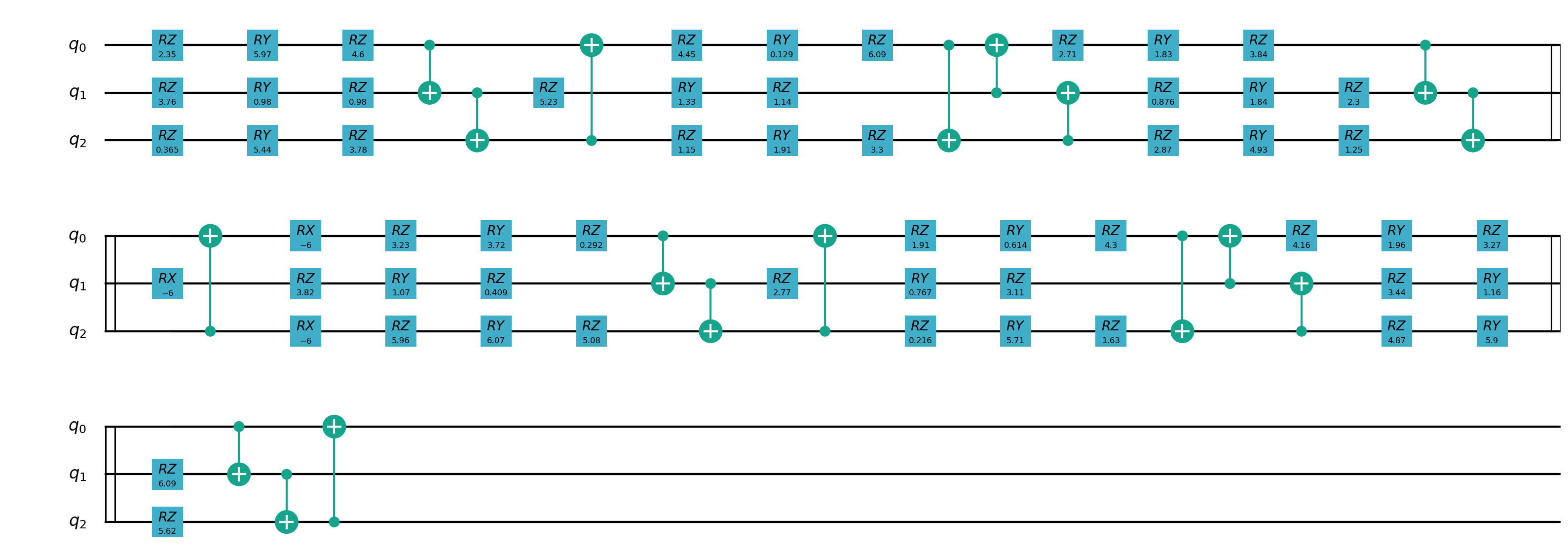

3. VSQL: Variational Shadow Quantum Learning for Classification模型¶

使用可变量子线路构建2分类模型,在与相似参数精度的神经网络对比分类精度,两者精度相近。而量子线路的参数量远小于经典神经网络。 算法基于论文:Variational Shadow Quantum Learning for Classification Model 复现。

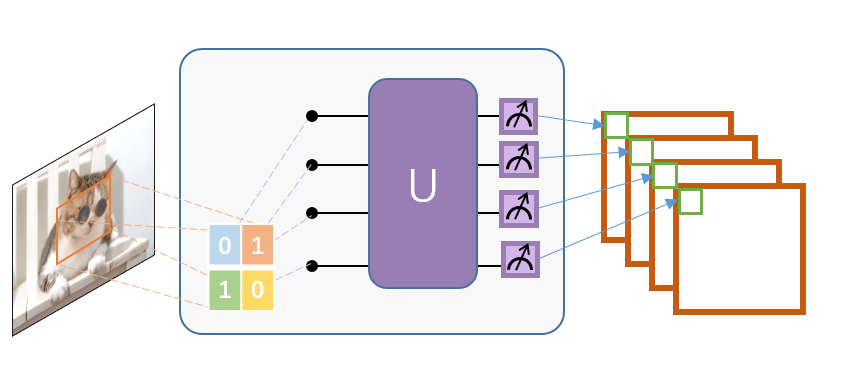

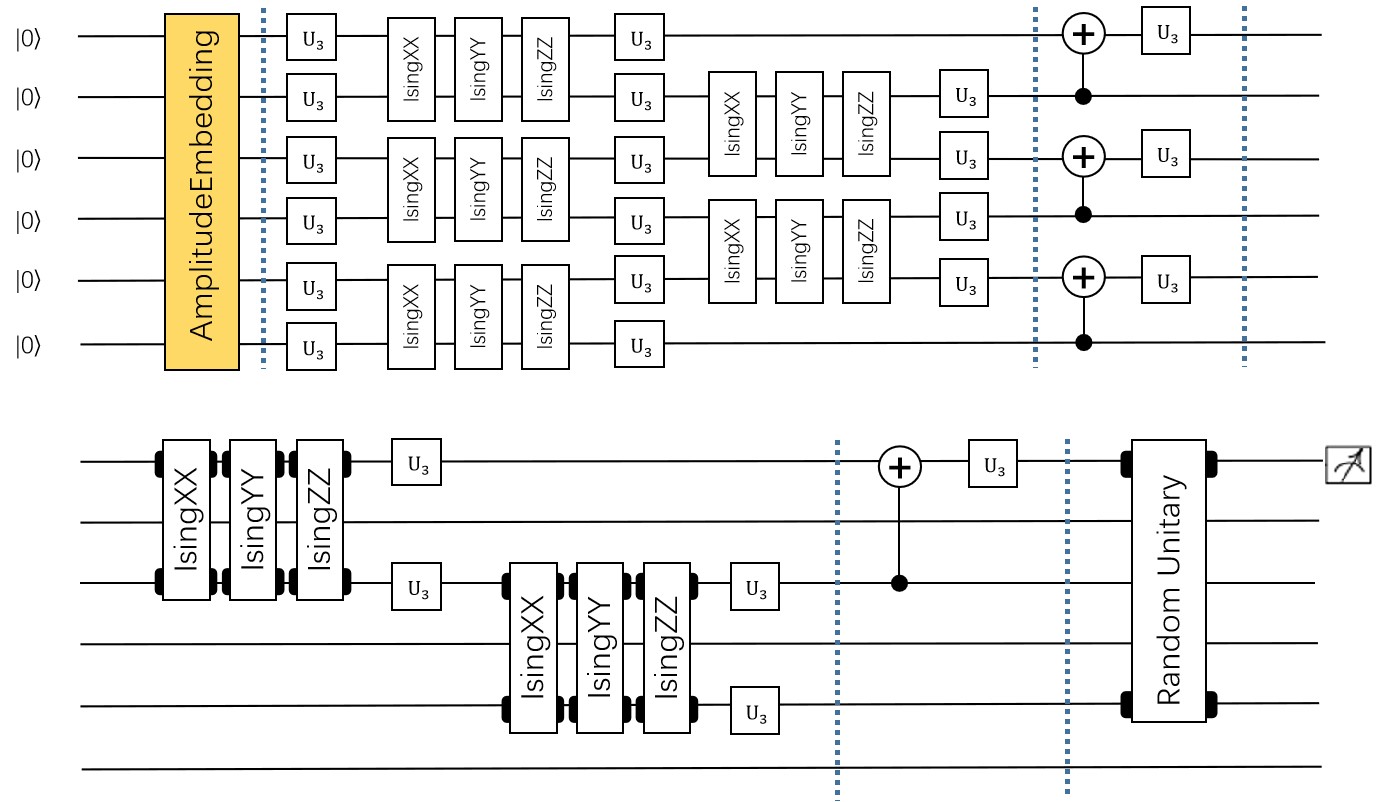

VSQL量子整体模型如下:

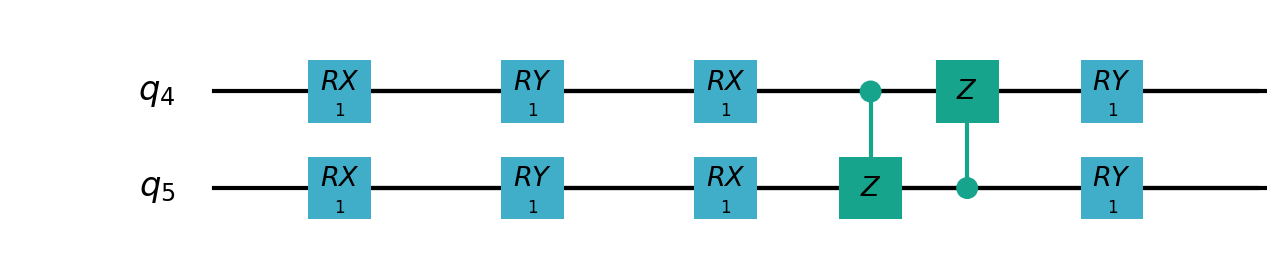

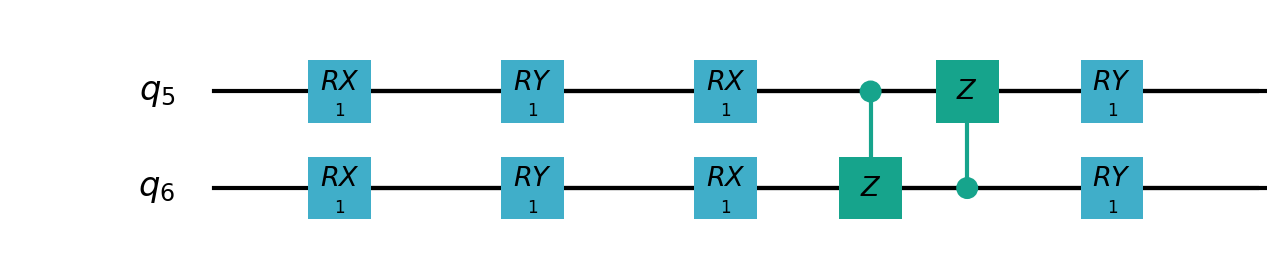

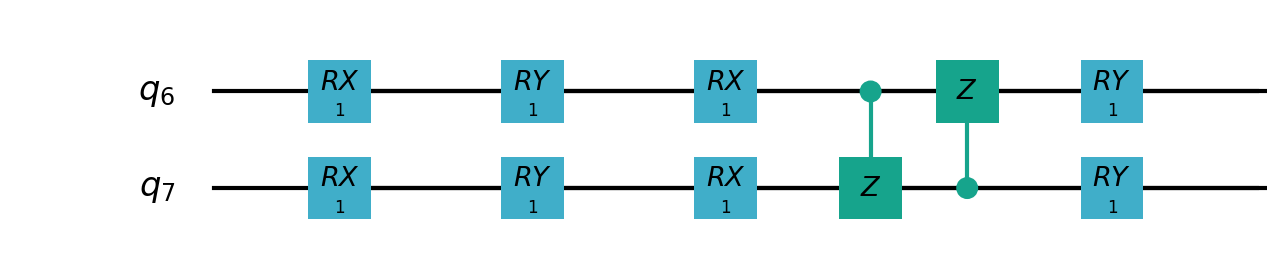

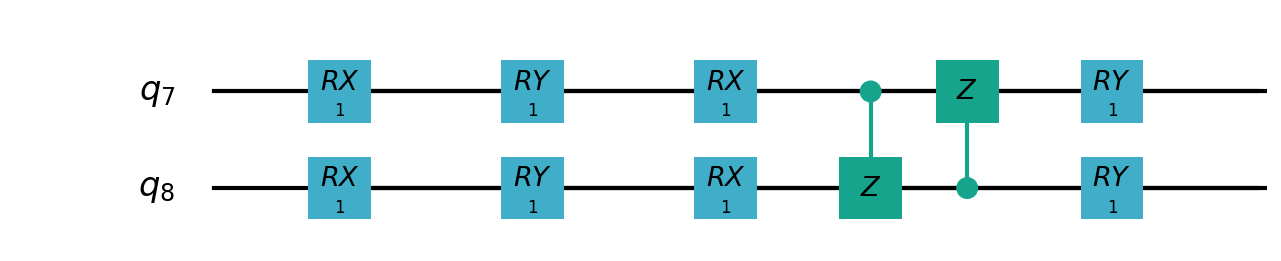

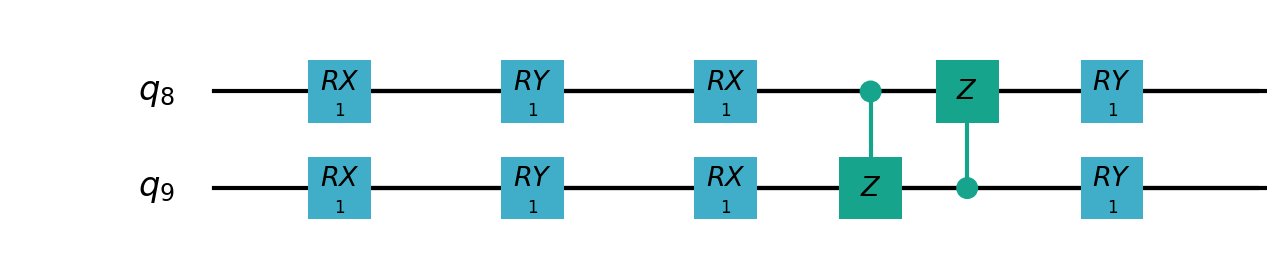

VSQL中各个量子比特上的局部量子线路图如下:

"""

Parameterized quantum circuit for VSQL

"""

import sys

sys.path.insert(0, "../")

import os

import os.path

import struct

import gzip

from pyvqnet.nn.module import Module

from pyvqnet.nn.loss import CategoricalCrossEntropy

from pyvqnet.optim.adam import Adam

from pyvqnet.data.data import data_generator

from pyvqnet.tensor import tensor

from pyvqnet.qnn.measure import expval

from pyvqnet.qnn.quantumlayer import QuantumLayer

from pyvqnet.qnn.template import AmplitudeEmbeddingCircuit

from pyvqnet.nn.linear import Linear

import numpy as np

import pyqpanda as pq

import matplotlib.pyplot as plt

import matplotlib

try:

matplotlib.use("TkAgg")

except: #pylint:disable=bare-except

print("Can not use matplot TkAgg")

pass

try:

import urllib.request

except ImportError:

raise ImportError("You should use Python 3.x")

url_base = "http://yann.lecun.com/exdb/mnist/"

key_file = {

"train_img": "train-images-idx3-ubyte.gz",

"train_label": "train-labels-idx1-ubyte.gz",

"test_img": "t10k-images-idx3-ubyte.gz",

"test_label": "t10k-labels-idx1-ubyte.gz"

}

def _download(dataset_dir, file_name):

"""

Download function for mnist dataset file

"""

file_path = dataset_dir + "/" + file_name

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as file:

file_path_ungz = file_path[:-3].replace("\\", "/")

if not os.path.exists(file_path_ungz):

open(file_path_ungz, "wb").write(file.read())

return

print("Downloading " + file_name + " ... ")

urllib.request.urlretrieve(url_base + file_name, file_path)

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as file:

file_path_ungz = file_path[:-3].replace("\\", "/")

file_path_ungz = file_path_ungz.replace("-idx", ".idx")

if not os.path.exists(file_path_ungz):

open(file_path_ungz, "wb").write(file.read())

print("Done")

def download_mnist(dataset_dir):

for v in key_file.values():

_download(dataset_dir, v)

if not os.path.exists("./result"):

os.makedirs("./result")

else:

pass

def circuits_of_vsql(x, weights, qlist, clist, machine): #pylint:disable=unused-argument

"""

VSQL model of quantum circuits

"""

weights = weights.reshape([depth + 1, 3, n_qsc])

def subcir(weights, qlist, depth, n_qsc, n_start): #pylint:disable=redefined-outer-name

cir = pq.QCircuit()

for i in range(n_qsc):

cir.insert(pq.RX(qlist[n_start + i], weights[0][0][i]))

cir.insert(pq.RY(qlist[n_start + i], weights[0][1][i]))

cir.insert(pq.RX(qlist[n_start + i], weights[0][2][i]))

for repeat in range(1, depth + 1):

for i in range(n_qsc - 1):

cir.insert(pq.CNOT(qlist[n_start + i], qlist[n_start + i + 1]))

cir.insert(pq.CNOT(qlist[n_start + n_qsc - 1], qlist[n_start]))

for i in range(n_qsc):

cir.insert(pq.RY(qlist[n_start + i], weights[repeat][1][i]))

return cir

def get_pauli_str(n_start, n_qsc): #pylint:disable=redefined-outer-name

pauli_str = ",".join("X" + str(i)

for i in range(n_start, n_start + n_qsc))

return {pauli_str: 1.0}

f_i = []

origin_in = AmplitudeEmbeddingCircuit(x, qlist)

for st in range(n - n_qsc + 1):

psd = get_pauli_str(st, n_qsc)

cir = pq.QCircuit()

cir.insert(origin_in)

cir.insert(subcir(weights, qlist, depth, n_qsc, st))

prog = pq.QProg()

prog.insert(cir)

f_ij = expval(machine, prog, psd, qlist)

f_i.append(f_ij)

f_i = np.array(f_i)

return f_i

#GLOBAL VAR

n = 10

n_qsc = 2

depth = 1

class QModel(Module):

"""

Model of VSQL

"""

def __init__(self):

super().__init__()

self.vq = QuantumLayer(circuits_of_vsql, (depth + 1) * 3 * n_qsc,

"cpu", 10)

self.fc = Linear(n - n_qsc + 1, 2)

def forward(self, x):

x = self.vq(x)

x = self.fc(x)

return x

class Model(Module):

def __init__(self):

super().__init__()

self.fc1 = Linear(input_channels=28 * 28, output_channels=2)

def forward(self, x):

x = tensor.flatten(x, 1)

x = self.fc1(x)

return x

def load_mnist(dataset="training_data", digits=np.arange(2), path="./"):

"""

load mnist data

"""

from array import array as pyarray

download_mnist(path)

if dataset == "training_data":

fname_image = os.path.join(path, "train-images.idx3-ubyte").replace(

"\\", "/")

fname_label = os.path.join(path, "train-labels.idx1-ubyte").replace(

"\\", "/")

elif dataset == "testing_data":

fname_image = os.path.join(path, "t10k-images.idx3-ubyte").replace(

"\\", "/")

fname_label = os.path.join(path, "t10k-labels.idx1-ubyte").replace(

"\\", "/")

else:

raise ValueError("dataset must be 'training_data' or 'testing_data'")

flbl = open(fname_label, "rb")

_, size = struct.unpack(">II", flbl.read(8))

lbl = pyarray("b", flbl.read())

flbl.close()

fimg = open(fname_image, "rb")

_, size, rows, cols = struct.unpack(">IIII", fimg.read(16))

img = pyarray("B", fimg.read())

fimg.close()

ind = [k for k in range(size) if lbl[k] in digits]

num = len(ind)

images = np.zeros((num, rows, cols), dtype=np.float32)

labels = np.zeros((num, 1), dtype=int)

for i in range(len(ind)):

images[i] = np.array(img[ind[i] * rows * cols:(ind[i] + 1) * rows *

cols]).reshape((rows, cols))

labels[i] = lbl[ind[i]]

return images, labels

def run_vsql():

"""

VQSL MODEL

"""

digits = [0, 1]

x_train, y_train = load_mnist("training_data", digits)

x_train = x_train / 255

y_train = y_train.reshape(-1, 1)

y_train = np.eye(len(digits))[y_train].reshape(-1, len(digits)).astype(

np.int64)

x_test, y_test = load_mnist("testing_data", digits)

x_test = x_test / 255

y_test = y_test.reshape(-1, 1)

y_test = np.eye(len(digits))[y_test].reshape(-1,

len(digits)).astype(np.int64)

x_train_list = []

x_test_list = []

for i in range(x_train.shape[0]):

x_train_list.append(

np.pad(x_train[i, :, :].flatten(), (0, 240),

constant_values=(0, 0)))

x_train = np.array(x_train_list)

for i in range(x_test.shape[0]):

x_test_list.append(

np.pad(x_test[i, :, :].flatten(), (0, 240),

constant_values=(0, 0)))

x_test = np.array(x_test_list)

x_train = x_train[:500]

y_train = y_train[:500]

x_test = x_test[:100]

y_test = y_test[:100]

print("model start")

model = QModel()

optimizer = Adam(model.parameters(), lr=0.1)

model.train()

result_file = open("./result/vqslrlt.txt", "w")

for epoch in range(1, 3):

model.train()

full_loss = 0

n_loss = 0

n_eval = 0

batch_size = 1

correct = 0

for x, y in data_generator(x_train,

y_train,

batch_size=batch_size,

shuffle=True):

optimizer.zero_grad()

try:

x = x.reshape(batch_size, 1024)

except: #pylint:disable=bare-except

x = x.reshape(-1, 1024)

output = model(x)

cceloss = CategoricalCrossEntropy()

loss = cceloss(y, output)

loss.backward()

optimizer._step()

full_loss += loss.item()

n_loss += batch_size

np_output = np.array(output.data, copy=False)

mask = np_output.argmax(1) == y.argmax(1)

correct += sum(mask)

print(f" n_loss {n_loss} Train Accuracy: {correct/n_loss} ")

print(f"Train Accuracy: {correct/n_loss} ")

print(f"Epoch: {epoch}, Loss: {full_loss / n_loss}")

result_file.write(f"{epoch}\t{full_loss / n_loss}\t{correct/n_loss}\t")

# Evaluation

model.eval()

print("eval")

correct = 0

full_loss = 0

n_loss = 0

n_eval = 0

batch_size = 1

for x, y in data_generator(x_test,

y_test,

batch_size=batch_size,

shuffle=True):

x = x.reshape(1, 1024)

output = model(x)

cceloss = CategoricalCrossEntropy()

loss = cceloss(y, output)

full_loss += loss.item()

np_output = np.array(output.data, copy=False)

mask = np_output.argmax(1) == y.argmax(1)

correct += sum(mask)

n_eval += 1

n_loss += 1

print(f"Eval Accuracy: {correct/n_eval}")

result_file.write(f"{full_loss / n_loss}\t{correct/n_eval}\n")

result_file.close()

del model

print("\ndone vqsl\n")

if __name__ == "__main__":

run_vsql()

VSQL在测试数据上准确率变化情况:

4.Quanvolution进行图像分类¶

在此示例中,我们实现了量子卷积神经网络,这是一种最初在论文 Quanvolutional Neural Networks: Powering Image Recognition with Quantum Circuits 中介绍的方法。

类似经典卷积,Quanvolution有以下步骤:

输入图像的一小块区域,在我们的例子中是 2×2方形经典数据,嵌入到量子电路中。

在此示例中,这是通过将参数化旋转逻辑门应用于在基态中初始化的量子位来实现的。此处的卷积核由参考文献中提出的随机电路生成变分线路。

最后测量量子系统,获得经典期望值列表。

类似于经典的卷积层,每个期望值都映射到单个输出像素的不同通道。

在不同区域重复相同的过程,可以扫描完整的输入图像,生成一个输出对象,该对象将被构造为多通道图像。

为了进行分类任务,本例在Quanvolution获取测量值后,使用经典全连接层 Linear 进行分类任务。

与经典卷积的主要区别在于,Quanvolution可以生成高度复杂的内核,其计算至少在原则上是经典难处理的。

Mnist数据集定义

import os

import os.path

import struct

import gzip

import sys

sys.path.insert(0, "../")

from pyvqnet.nn.module import Module

from pyvqnet.nn.loss import NLL_Loss

from pyvqnet.optim.adam import Adam

from pyvqnet.data.data import data_generator

from pyvqnet.tensor import tensor

from pyvqnet.qnn.measure import expval

from pyvqnet.nn.linear import Linear

import numpy as np

from pyvqnet.qnn.qcnn import Quanvolution

import matplotlib.pyplot as plt

import matplotlib

try:

matplotlib.use("TkAgg")

except: #pylint:disable=bare-except

print("Can not use matplot TkAgg")

pass

try:

import urllib.request

except ImportError:

raise ImportError("You should use Python 3.x")

url_base = "http://yann.lecun.com/exdb/mnist/"

key_file = {

"train_img": "train-images-idx3-ubyte.gz",

"train_label": "train-labels-idx1-ubyte.gz",

"test_img": "t10k-images-idx3-ubyte.gz",

"test_label": "t10k-labels-idx1-ubyte.gz"

}

def _download(dataset_dir, file_name):

"""

Download function for mnist dataset file

"""

file_path = dataset_dir + "/" + file_name

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as file:

file_path_ungz = file_path[:-3].replace("\\", "/")

if not os.path.exists(file_path_ungz):

open(file_path_ungz, "wb").write(file.read())

return

print("Downloading " + file_name + " ... ")

urllib.request.urlretrieve(url_base + file_name, file_path)

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as file:

file_path_ungz = file_path[:-3].replace("\\", "/")

file_path_ungz = file_path_ungz.replace("-idx", ".idx")

if not os.path.exists(file_path_ungz):

open(file_path_ungz, "wb").write(file.read())

print("Done")

def download_mnist(dataset_dir):

for v in key_file.values():

_download(dataset_dir, v)

if not os.path.exists("./result"):

os.makedirs("./result")

else:

pass

def load_mnist(dataset="training_data", digits=np.arange(10), path="./"):

"""

load mnist data

"""

from array import array as pyarray

download_mnist(path)

if dataset == "training_data":

fname_image = os.path.join(path, "train-images.idx3-ubyte").replace(

"\\", "/")

fname_label = os.path.join(path, "train-labels.idx1-ubyte").replace(

"\\", "/")

elif dataset == "testing_data":

fname_image = os.path.join(path, "t10k-images.idx3-ubyte").replace(

"\\", "/")

fname_label = os.path.join(path, "t10k-labels.idx1-ubyte").replace(

"\\", "/")

else:

raise ValueError("dataset must be 'training_data' or 'testing_data'")

flbl = open(fname_label, "rb")

_, size = struct.unpack(">II", flbl.read(8))

lbl = pyarray("b", flbl.read())

flbl.close()

fimg = open(fname_image, "rb")

_, size, rows, cols = struct.unpack(">IIII", fimg.read(16))

img = pyarray("B", fimg.read())

fimg.close()

ind = [k for k in range(size) if lbl[k] in digits]

num = len(ind)

images = np.zeros((num, rows, cols))

labels = np.zeros((num, 1), dtype=int)

for i in range(len(ind)):

images[i] = np.array(img[ind[i] * rows * cols:(ind[i] + 1) * rows *

cols]).reshape((rows, cols))

labels[i] = lbl[ind[i]]

return images, labels

模型定义与运行函数定义

class QModel(Module):

def __init__(self):

super().__init__()

self.vq = Quanvolution([4, 2], (2, 2))

self.fc = Linear(4 * 14 * 14, 10)

def forward(self, x):

x = self.vq(x)

x = tensor.flatten(x, 1)

x = self.fc(x)

x = tensor.log_softmax(x)

return x

def run_quanvolution():

digit = 10

x_train, y_train = load_mnist("training_data", digits=np.arange(digit))

x_train = x_train / 255

y_train = y_train.flatten()

x_test, y_test = load_mnist("testing_data", digits=np.arange(digit))

x_test = x_test / 255

y_test = y_test.flatten()

x_train = x_train[:500]

y_train = y_train[:500]

x_test = x_test[:100]

y_test = y_test[:100]

print("model start")

model = QModel()

optimizer = Adam(model.parameters(), lr=5e-3)

model.train()

result_file = open("quanvolution.txt", "w")

cceloss = NLL_Loss()

N_EPOCH = 15

for epoch in range(1, N_EPOCH):

model.train()

full_loss = 0

n_loss = 0

n_eval = 0

batch_size = 10

correct = 0

for x, y in data_generator(x_train,

y_train,

batch_size=batch_size,

shuffle=True):

optimizer.zero_grad()

try:

x = x.reshape(batch_size, 1, 28, 28)

except: #pylint:disable=bare-except

x = x.reshape(-1, 1, 28, 28)

output = model(x)

loss = cceloss(y, output)

print(f"loss {loss}")

loss.backward()

optimizer._step()

full_loss += loss.item()

n_loss += batch_size

np_output = np.array(output.data, copy=False)

mask = np_output.argmax(1) == y

correct += sum(mask)

print(f"correct {correct}")

print(f"Train Accuracy: {correct/n_loss}%")

print(f"Epoch: {epoch}, Loss: {full_loss / n_loss}")

result_file.write(f"{epoch}\t{full_loss / n_loss}\t{correct/n_loss}\t")

# Evaluation

model.eval()

print("eval")

correct = 0

full_loss = 0

n_loss = 0

n_eval = 0

batch_size = 1

for x, y in data_generator(x_test,

y_test,

batch_size=batch_size,

shuffle=True):

x = x.reshape(-1, 1, 28, 28)

output = model(x)

loss = cceloss(y, output)

full_loss += loss.item()

np_output = np.array(output.data, copy=False)

mask = np_output.argmax(1) == y

correct += sum(mask)

n_eval += 1

n_loss += 1

print(f"Eval Accuracy: {correct/n_eval}")

result_file.write(f"{full_loss / n_loss}\t{correct/n_eval}\n")

result_file.close()

del model

print("\ndone\n")

if __name__ == "__main__":

run_quanvolution()

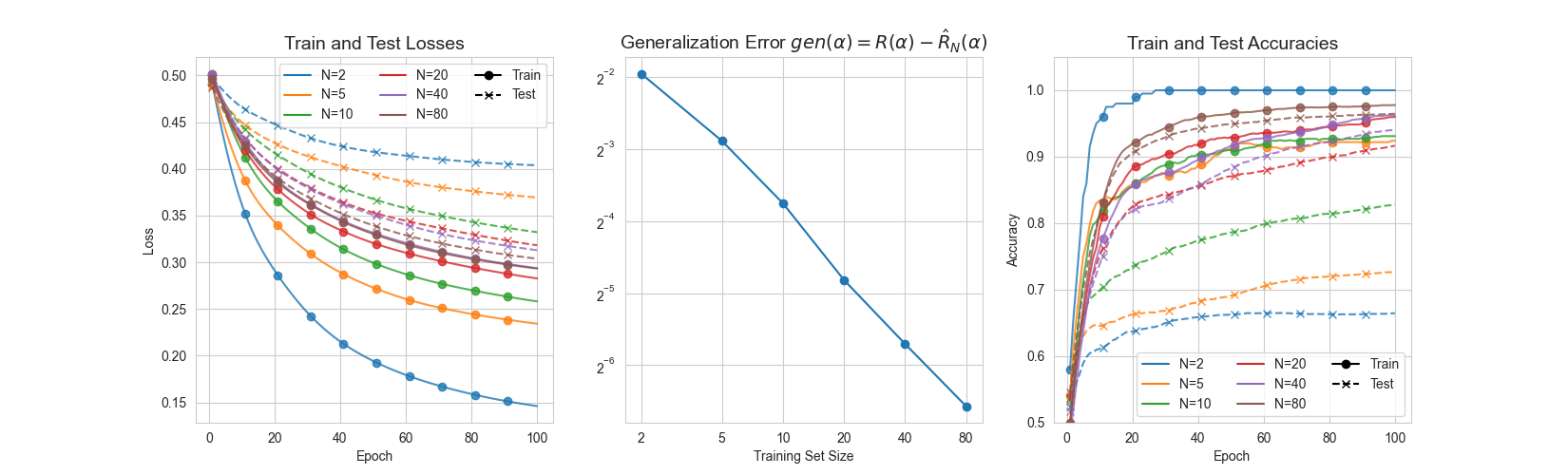

训练集、验证集损失,训练集、验证集分类准确率随Epoch 变换情况。

# epoch train_loss train_accuracy eval_loss eval_accuracy

# 1 0.2488900272846222 0.232 1.7297331787645818 0.39

# 2 0.12281704187393189 0.646 1.201728610806167 0.61

# 3 0.08001763761043548 0.772 0.8947569639235735 0.73

# 4 0.06211201059818268 0.83 0.777864265316166 0.74

# 5 0.052190632969141004 0.858 0.7291000287979841 0.76

# 6 0.04542196464538574 0.87 0.6764470228599384 0.8

# 7 0.04029472427070141 0.896 0.6153804161818698 0.79

# 8 0.03600500610470772 0.902 0.5644993982824963 0.81

# 9 0.03230033944547176 0.916 0.528938240573043 0.81

# 10 0.02912954458594322 0.93 0.5058713140769396 0.83

# 11 0.026443827204406262 0.936 0.49064547760412097 0.83

# 12 0.024144304402172564 0.942 0.4800815625616815 0.82

# 13 0.022141477409750223 0.952 0.4724775951183983 0.83

# 14 0.020372112181037665 0.956 0.46692863543197743 0.83

量子自编码器模型¶

1.量子自编码器¶

经典的自动编码器是一种神经网络,可以在高维空间学习数据的高效低维表示。自动编码器的任务是,给定一个输入x,将x映射到一个低维点y,这样x就可以从y中恢复。 可以选择底层自动编码器网络的结构,以便在较小的维度上表示数据,从而有效地压缩输入。受这一想法的启发,量子自动编码器的模型来对量子数据执行类似的任务。 量子自动编码器被训练来压缩量子态的特定数据集,而经典的压缩算法无法使用。量子自动编码器的参数采用经典优化算法进行训练。 我们展示了一个简单的可编程线路的例子,它可以被训练成一个高效的自动编码器。我们在量子模拟的背景下应用我们的模型来压缩哈伯德模型和分子哈密顿量的基态。 该例子参考自 Quantum autoencoders for efficient compression of quantum data .

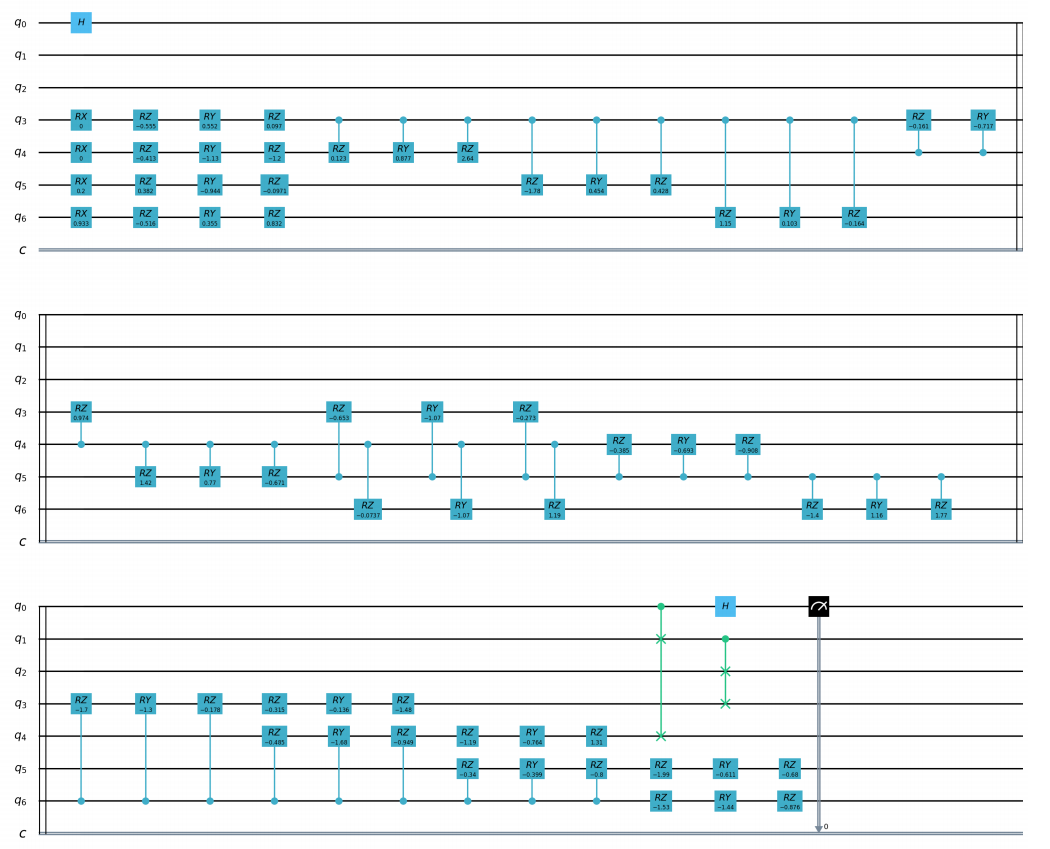

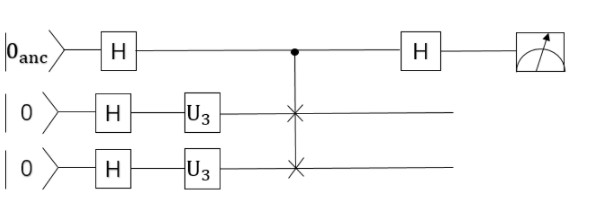

QAE量子线路:

"""

Quantum AutoEncoder demo

"""

import os

import sys

sys.path.insert(0,'../')

import numpy as np

from pyvqnet.nn.module import Module

from pyvqnet.nn.loss import fidelityLoss

from pyvqnet.optim.adam import Adam

from pyvqnet.data.data import data_generator

from pyvqnet.qnn.qae.qae import QAElayer

import matplotlib.pyplot as plt

import matplotlib

try:

matplotlib.use('TkAgg')

except:

pass

try:

import urllib.request

except ImportError:

raise ImportError('You should use Python 3.x')

import os.path

import gzip

url_base = 'http://yann.lecun.com/exdb/mnist/'

key_file = {

'train_img':'train-images-idx3-ubyte.gz',

'train_label':'train-labels-idx1-ubyte.gz',

'test_img':'t10k-images-idx3-ubyte.gz',

'test_label':'t10k-labels-idx1-ubyte.gz'

}

def _download(dataset_dir,file_name):

file_path = dataset_dir + "/" + file_name

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as f:

file_path_ungz = file_path[:-3].replace('\\', '/')

if not os.path.exists(file_path_ungz):

open(file_path_ungz,"wb").write(f.read())

return

print("Downloading " + file_name + " ... ")

urllib.request.urlretrieve(url_base + file_name, file_path)

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as f:

file_path_ungz = file_path[:-3].replace('\\', '/')

file_path_ungz = file_path_ungz.replace('-idx', '.idx')

if not os.path.exists(file_path_ungz):

open(file_path_ungz,"wb").write(f.read())

print("Done")

def download_mnist(dataset_dir):

for v in key_file.values():

_download(dataset_dir,v)

class Model(Module):

def __init__(self, trash_num: int = 2, total_num: int = 7):

super().__init__()

self.pqc = QAElayer(trash_num, total_num)

def forward(self, x):

x = self.pqc(x)

return x

def load_mnist(dataset="training_data", digits=np.arange(2), path="./"): # 下载数据

import os, struct

from array import array as pyarray

download_mnist(path)

if dataset == "training_data":

fname_image = os.path.join(path, 'train-images.idx3-ubyte').replace('\\', '/')

fname_label = os.path.join(path, 'train-labels.idx1-ubyte').replace('\\', '/')

elif dataset == "testing_data":

fname_image = os.path.join(path, 't10k-images.idx3-ubyte').replace('\\', '/')

fname_label = os.path.join(path, 't10k-labels.idx1-ubyte').replace('\\', '/')

else:

raise ValueError("dataset must be 'training_data' or 'testing_data'")

flbl = open(fname_label, 'rb')

magic_nr, size = struct.unpack(">II", flbl.read(8))

lbl = pyarray("b", flbl.read())

flbl.close()

fimg = open(fname_image, 'rb')

magic_nr, size, rows, cols = struct.unpack(">IIII", fimg.read(16))

img = pyarray("B", fimg.read())

fimg.close()

ind = [k for k in range(size) if lbl[k] in digits]

N = len(ind)

images = np.zeros((N, rows, cols))

labels = np.zeros((N, 1), dtype=int)

for i in range(len(ind)):

images[i] = np.array(img[ind[i] * rows * cols: (ind[i] + 1) * rows * cols]).reshape((rows, cols))

labels[i] = lbl[ind[i]]

return images, labels

def run2():

##load dataset

x_train, y_train = load_mnist("training_data") # 下载训练数据

x_train = x_train / 255 # 将数据进行归一化处理[0,1]

x_test, y_test = load_mnist("testing_data")

x_test = x_test / 255

x_train = x_train.reshape([-1, 1, 28, 28])

x_test = x_test.reshape([-1, 1, 28, 28])

x_train = x_train[:100, :, :, :]

x_train = np.resize(x_train, [x_train.shape[0], 1, 2, 2])

x_test = x_test[:10, :, :, :]

x_test = np.resize(x_test, [x_test.shape[0], 1, 2, 2])

encode_qubits = 4

latent_qubits = 2

trash_qubits = encode_qubits - latent_qubits

total_qubits = 1 + trash_qubits + encode_qubits

print("model start")

model = Model(trash_qubits, total_qubits)

optimizer = Adam(model.parameters(), lr=0.005)

model.train()

F1 = open("rlt.txt", "w")

loss_list = []

loss_list_test = []

fidelity_train = []

fidelity_val = []

for epoch in range(1, 10):

running_fidelity_train = 0

running_fidelity_val = 0

print(f"epoch {epoch}")

model.train()

full_loss = 0

n_loss = 0

n_eval = 0

batch_size = 1

correct = 0

iter = 0

if epoch %5 ==1:

optimizer.lr = optimizer.lr *0.5

for x, y in data_generator(x_train, y_train, batch_size=batch_size, shuffle=True): #shuffle batch rather than data

x = x.reshape((-1, encode_qubits))

x = np.concatenate((np.zeros([batch_size, 1 + trash_qubits]), x), 1)

optimizer.zero_grad()

output = model(x)

iter += 1

np_out = np.array(output.data)

floss = fidelityLoss()

loss = floss(output)

loss_data = np.array(loss.data)

loss.backward()

running_fidelity_train += np_out[0]

optimizer._step()

full_loss += loss_data[0]

n_loss += batch_size

np_output = np.array(output.data, copy=False)

mask = np_output.argmax(1) == y.argmax(1)

correct += sum(mask)

loss_output = full_loss / n_loss

print(f"Epoch: {epoch}, Loss: {loss_output}")

loss_list.append(loss_output)

# Evaluation

model.eval()

correct = 0

full_loss = 0

n_loss = 0

n_eval = 0

batch_size = 1

for x, y in data_generator(x_test, y_test, batch_size=batch_size, shuffle=True):

x = x.reshape((-1, encode_qubits))

x = np.concatenate((np.zeros([batch_size, 1 + trash_qubits]),x),1)

output = model(x)

floss = fidelityLoss()

loss = floss(output)

loss_data = np.array(loss.data)

full_loss += loss_data[0]

running_fidelity_val += np.array(output.data)[0]

n_eval += 1

n_loss += 1

loss_output = full_loss / n_loss

print(f"Epoch: {epoch}, Loss: {loss_output}")

loss_list_test.append(loss_output)

fidelity_train.append(running_fidelity_train / 64)

fidelity_val.append(running_fidelity_val / 64)

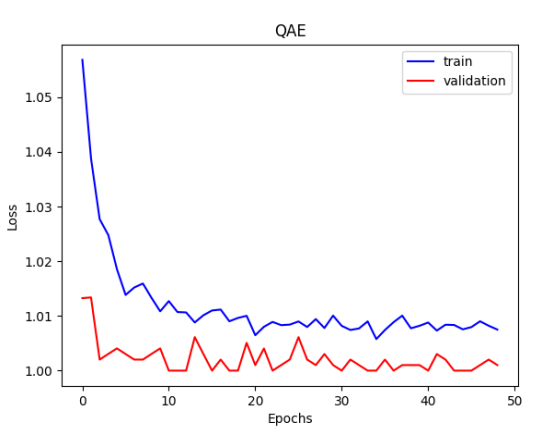

figure_path = os.path.join(os.getcwd(), 'QAE-rate1.png')

plt.plot(loss_list, color="blue", label="train")

plt.plot(loss_list_test, color="red", label="validation")

plt.title('QAE')

plt.xlabel("Epochs")

plt.ylabel("Loss")

plt.legend(loc="upper right")

plt.savefig(figure_path)

plt.show()

F1.write(f"done\n")

F1.close()

del model

if __name__ == '__main__':

run2()

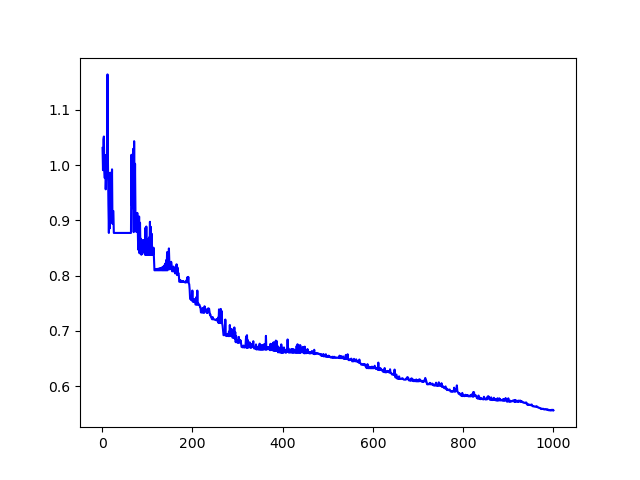

运行上述代码得到的QAE误差值,该loss为1/保真度,趋向于1表示保真度接近1。

量子线路结构学习¶

1.量子线路结构学习¶

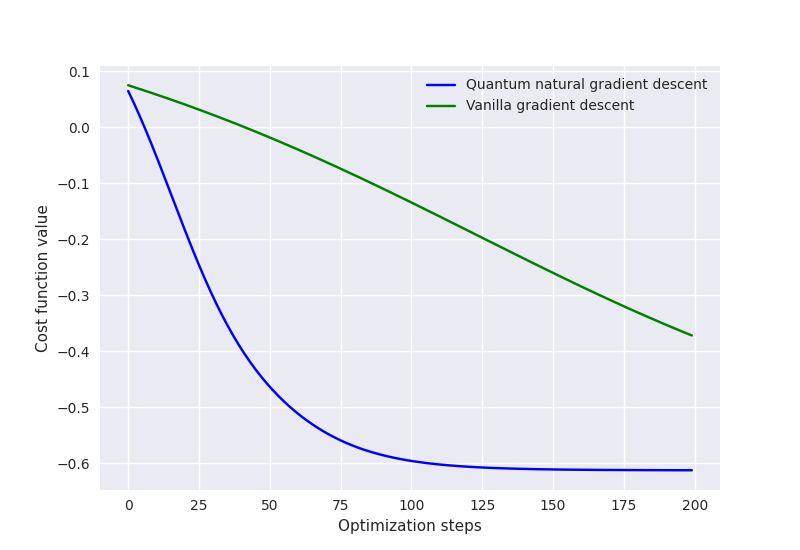

在量子线路结构中,最经常使用的带参数的量子门就是RZ、RY、RX门,但是在什么情况下使用什么门是一个十分值得研究的问题,一种方法就是随机选择,但是这种情况很有可能达不到最好的效果。 Quantum circuit structure learning任务的核心目标就是找到最优的带参量子门组合。 这里的做法是这一组最优的量子逻辑门要使得目标函数(loss function)取得最小值。

"""

Quantum Circuits Strcture Learning Demo

"""

import sys

sys.path.insert(0,"../")

import copy

import pyqpanda as pq

from pyvqnet.tensor.tensor import QTensor

from pyvqnet.qnn.measure import expval

import numpy as np

import matplotlib.pyplot as plt

import matplotlib

try:

matplotlib.use("TkAgg")

except: #pylint:disable=bare-except

print("Can not use matplot TkAgg")

pass

machine = pq.CPUQVM()

machine.init_qvm()

nqbits = machine.qAlloc_many(2)

def gen(param, generators, qbits, circuit):

if generators == "X":

circuit.insert(pq.RX(qbits, param))

elif generators == "Y":

circuit.insert(pq.RY(qbits, param))

else:

circuit.insert(pq.RZ(qbits, param))

def circuits(params, generators, circuit):

gen(params[0], generators[0], nqbits[0], circuit)

gen(params[1], generators[1], nqbits[1], circuit)

circuit.insert(pq.CNOT(nqbits[0], nqbits[1]))

prog = pq.QProg()

prog.insert(circuit)

return prog

def ansatz1(params: QTensor, generators):

circuit = pq.QCircuit()

params = params.to_numpy()

prog = circuits(params, generators, circuit)

return expval(machine, prog, {"Z0": 1},

nqbits), expval(machine, prog, {"Y1": 1}, nqbits)

def ansatz2(params: QTensor, generators):

circuit = pq.QCircuit()

params = params.to_numpy()

prog = circuits(params, generators, circuit)

return expval(machine, prog, {"X0": 1}, nqbits)

def loss(params, generators):

z, y = ansatz1(params, generators)

x = ansatz2(params, generators)

return 0.5 * y + 0.8 * z - 0.2 * x

def rotosolve(d, params, generators, cost, M_0):#pylint:disable=invalid-name

"""

rotosolve algorithm implementation

"""

params[d] = np.pi / 2.0

m0_plus = cost(QTensor(params), generators)

params[d] = -np.pi / 2.0

m0_minus = cost(QTensor(params), generators)

a = np.arctan2(2.0 * M_0 - m0_plus - m0_minus,

m0_plus - m0_minus) # returns value in (-pi,pi]

params[d] = -np.pi / 2.0 - a

if params[d] <= -np.pi:

params[d] += 2 * np.pi

return cost(QTensor(params), generators)

def optimal_theta_and_gen_helper(index, params, generators):

"""

find optimal varaibles

"""

params[index] = 0.

m0 = loss(QTensor(params), generators) #init value

for kind in ["X", "Y", "Z"]:

generators[index] = kind

params_cost = rotosolve(index, params, generators, loss, m0)

if kind == "X" or params_cost <= params_opt_cost:

params_opt_d = params[index]

params_opt_cost = params_cost

generators_opt_d = kind

return params_opt_d, generators_opt_d

def rotoselect_cycle(params: np, generators):

for index in range(params.shape[0]):

params[index], generators[index] = optimal_theta_and_gen_helper(

index, params, generators)

return params, generators

params = QTensor(np.array([0.3, 0.25]))

params = params.to_numpy()

generator = ["X", "Y"]

generators = copy.deepcopy(generator)

epoch = 20

state_save = []

for i in range(epoch):

state_save.append(loss(QTensor(params), generators))

params, generators = rotoselect_cycle(params, generators)

print("Optimal generators are: {}".format(generators))

print("Optimal params are: {}".format(params))

steps = np.arange(0, epoch)

plt.plot(steps, state_save, "o-")

plt.title("rotoselect")

plt.xlabel("cycles")

plt.ylabel("cost")

plt.yticks(np.arange(-1.25, 0.80, 0.25))

plt.tight_layout()

plt.show()

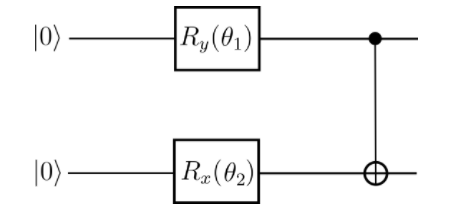

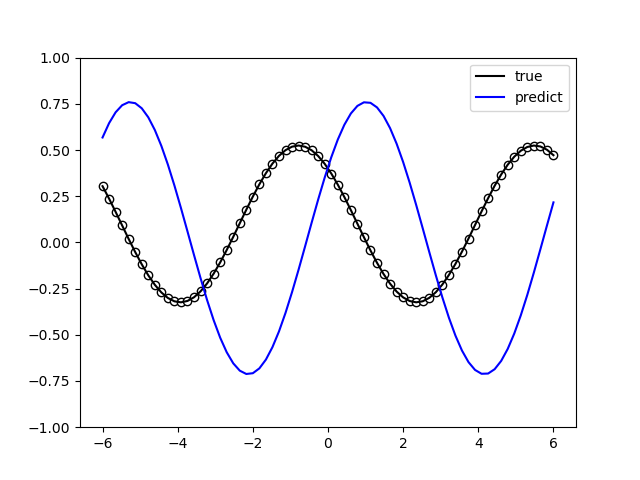

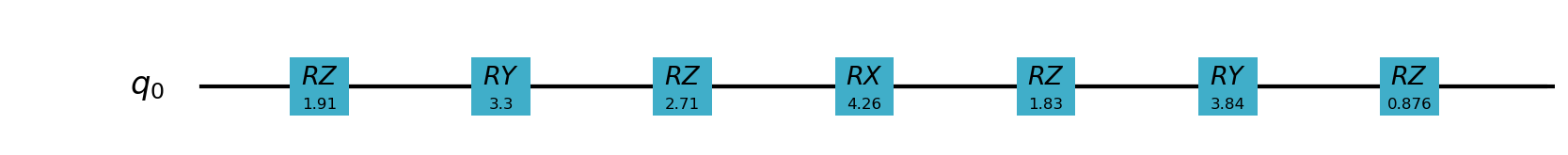

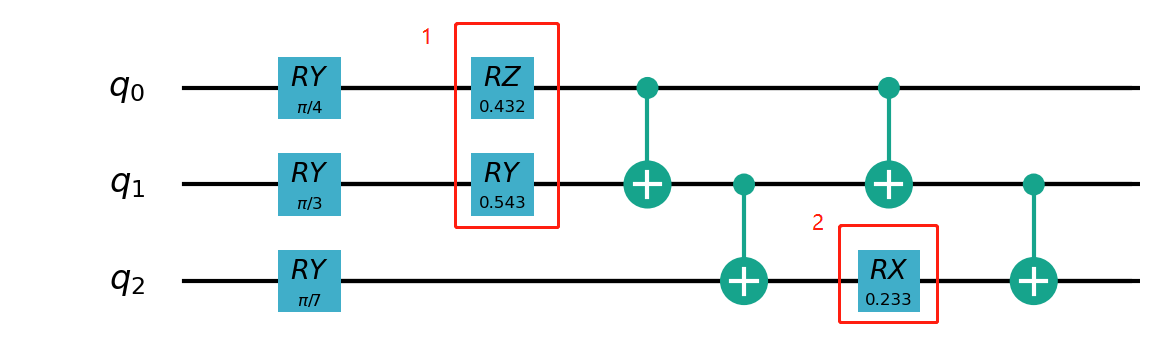

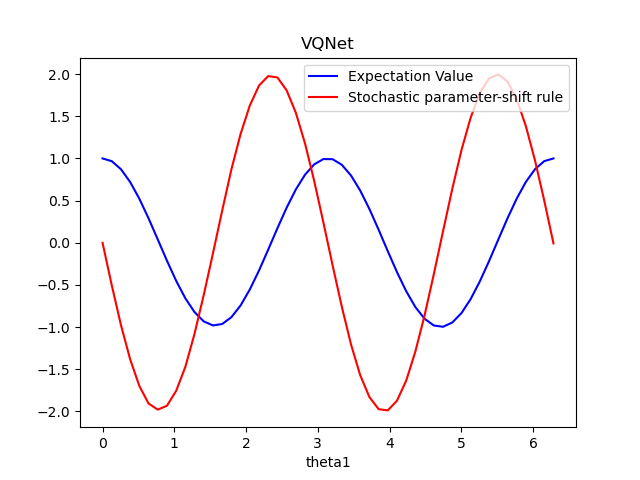

运行上述代码得到的量子线路结构。可见为一个 \(RX\),一个 \(RY\)

以及逻辑门中的参数 \(\theta_1\), \(\theta_2\) 不同参数下的损失函数

量子经典神经网络混合模型¶

1.混合量子经典神经网络模型¶

机器学习 (ML) 已成为一个成功的跨学科领域,旨在从数据中以数学方式提取可概括的信息。量子机器学习寻求利用量子力学原理来增强机器学习,反之亦然。 无论您的目标是通过将困难的计算外包给量子计算机来增强经典 ML 算法,还是使用经典 ML 架构优化量子算法——两者都属于量子机器学习 (QML) 的范畴。 在本章中,我们将探讨如何部分量化经典神经网络以创建混合量子经典神经网络。量子线路由量子逻辑门构成,这些逻辑门实现的量子计算被论文 Quantum Circuit Learning 证明是可微分。因此研究者尝试将量子线路与经典神经网络模块放到一起同时进行混合量子经典机器学习任务的训练。 我们将编写一个简单的示例,使用VQNet实现一个神经网络模型训练任务。此示例的目的是展示VQNet的简便性,并鼓励 ML 从业者探索量子计算的可能性。

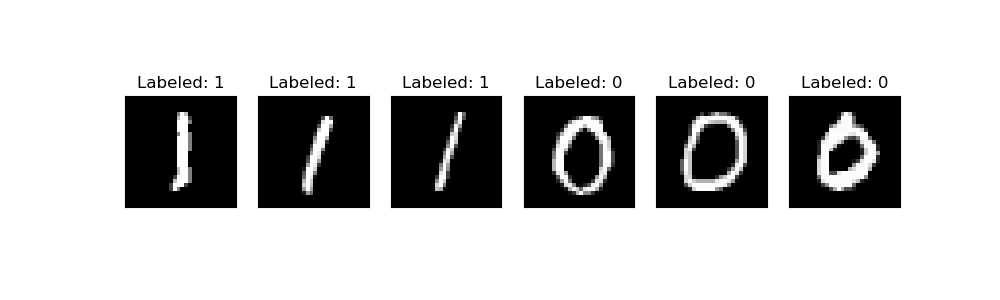

数据准备¶

我们将使用 MNIST datasets 这一神经网络最基础的手写数字数据库作为分类数据 。 我们首先加载MNIST并过滤包含0和1的数据样本。这些样本分为训练数据 training_data 和测试数据 testing_data,它们每条数据均为1*784的维度大小。

import time

import os

import struct

import gzip

from pyvqnet.nn.module import Module

from pyvqnet.nn.linear import Linear

from pyvqnet.nn.conv import Conv2D

from pyvqnet.nn import activation as F

from pyvqnet.nn.pooling import MaxPool2D

from pyvqnet.nn.loss import CategoricalCrossEntropy

from pyvqnet.optim.adam import Adam

from pyvqnet.data.data import data_generator

from pyvqnet.tensor import tensor

from pyvqnet.tensor import QTensor

import pyqpanda as pq

import numpy as np

import matplotlib.pyplot as plt

import matplotlib

try:

matplotlib.use("TkAgg")

except: #pylint:disable=bare-except

print("Can not use matplot TkAgg")

pass

try:

import urllib.request

except ImportError:

raise ImportError("You should use Python 3.x")

url_base = 'http://yann.lecun.com/exdb/mnist/'

key_file = {

'train_img':'train-images-idx3-ubyte.gz',

'train_label':'train-labels-idx1-ubyte.gz',

'test_img':'t10k-images-idx3-ubyte.gz',

'test_label':'t10k-labels-idx1-ubyte.gz'

}

def _download(dataset_dir,file_name):

file_path = dataset_dir + "/" + file_name

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as f:

file_path_ungz = file_path[:-3].replace('\\', '/')

if not os.path.exists(file_path_ungz):

open(file_path_ungz,"wb").write(f.read())

return

print("Downloading " + file_name + " ... ")

urllib.request.urlretrieve(url_base + file_name, file_path)

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as f:

file_path_ungz = file_path[:-3].replace('\\', '/')

file_path_ungz = file_path_ungz.replace('-idx', '.idx')

if not os.path.exists(file_path_ungz):

open(file_path_ungz,"wb").write(f.read())

print("Done")

def download_mnist(dataset_dir):

for v in key_file.values():

_download(dataset_dir,v)

def load_mnist(dataset="training_data", digits=np.arange(2), path="./"): # 下载数据

import os, struct

from array import array as pyarray

download_mnist(path)

if dataset == "training_data":

fname_image = os.path.join(path, 'train-images.idx3-ubyte').replace('\\', '/')

fname_label = os.path.join(path, 'train-labels.idx1-ubyte').replace('\\', '/')

elif dataset == "testing_data":

fname_image = os.path.join(path, 't10k-images.idx3-ubyte').replace('\\', '/')

fname_label = os.path.join(path, 't10k-labels.idx1-ubyte').replace('\\', '/')

else:

raise ValueError("dataset must be 'training_data' or 'testing_data'")

flbl = open(fname_label, 'rb')

magic_nr, size = struct.unpack(">II", flbl.read(8))

lbl = pyarray("b", flbl.read())

flbl.close()

fimg = open(fname_image, 'rb')

magic_nr, size, rows, cols = struct.unpack(">IIII", fimg.read(16))

img = pyarray("B", fimg.read())

fimg.close()

ind = [k for k in range(size) if lbl[k] in digits]

N = len(ind)

images = np.zeros((N, rows, cols))

labels = np.zeros((N, 1), dtype=int)

for i in range(len(ind)):

images[i] = np.array(img[ind[i] * rows * cols: (ind[i] + 1) * rows * cols]).reshape((rows, cols))

labels[i] = lbl[ind[i]]

return images, labels

def data_select(train_num, test_num):

x_train, y_train = load_mnist("training_data")

x_test, y_test = load_mnist("testing_data")

# Train Leaving only labels 0 and 1

idx_train = np.append(np.where(y_train == 0)[0][:train_num],

np.where(y_train == 1)[0][:train_num])

x_train = x_train[idx_train]

y_train = y_train[idx_train]

x_train = x_train / 255

y_train = np.eye(2)[y_train].reshape(-1, 2)

# Test Leaving only labels 0 and 1

idx_test = np.append(np.where(y_test == 0)[0][:test_num],

np.where(y_test == 1)[0][:test_num])

x_test = x_test[idx_test]

y_test = y_test[idx_test]

x_test = x_test / 255

y_test = np.eye(2)[y_test].reshape(-1, 2)

return x_train, y_train, x_test, y_test

n_samples_show = 6

x_train, y_train, x_test, y_test = data_select(100, 50)

fig, axes = plt.subplots(nrows=1, ncols=n_samples_show, figsize=(10, 3))

for img ,targets in zip(x_test,y_test):

if n_samples_show <= 3:

break

if targets[0] == 1:

axes[n_samples_show - 1].set_title("Labeled: 0")

axes[n_samples_show - 1].imshow(img.squeeze(), cmap='gray')

axes[n_samples_show - 1].set_xticks([])

axes[n_samples_show - 1].set_yticks([])

n_samples_show -= 1

for img ,targets in zip(x_test,y_test):

if n_samples_show <= 0:

break

if targets[0] == 0:

axes[n_samples_show - 1].set_title("Labeled: 1")

axes[n_samples_show - 1].imshow(img.squeeze(), cmap='gray')

axes[n_samples_show - 1].set_xticks([])

axes[n_samples_show - 1].set_yticks([])

n_samples_show -= 1

plt.show()

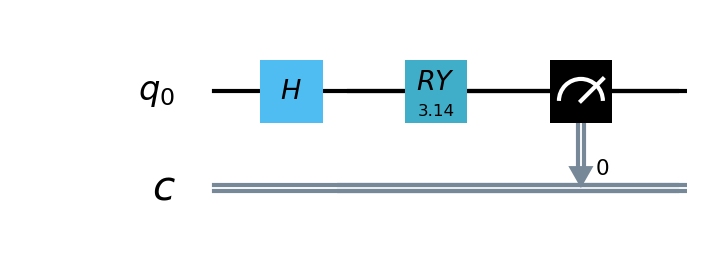

构建量子线路¶

在本例中,我们使用本源量子的 pyQPanda

定义了一个1量子比特的简单量子线路,该线路将经典神经网络层的输出作为输入,通过 H, RY 逻辑门进行量子数据编码,并计算z方向的哈密顿期望值作为输出。

from pyqpanda import *

import pyqpanda as pq

import numpy as np

def circuit(weights):

num_qubits = 1

#pyQPanda 创建模拟器

machine = pq.CPUQVM()

machine.init_qvm()

#pyQPanda 分配量子比特

qubits = machine.qAlloc_many(num_qubits)

#pyQPanda 分配经典比特辅助测量

cbits = machine.cAlloc_many(num_qubits)

#构建线路

circuit = pq.QCircuit()

circuit.insert(pq.H(qubits[0]))

circuit.insert(pq.RY(qubits[0], weights[0]))

prog = pq.QProg()

prog.insert(circuit)

prog << measure_all(qubits, cbits)

#运行量子程序

result = machine.run_with_configuration(prog, cbits, 100)

counts = np.array(list(result.values()))

states = np.array(list(result.keys())).astype(float)

probabilities = counts / 100

expectation = np.sum(states * probabilities)

return expectation

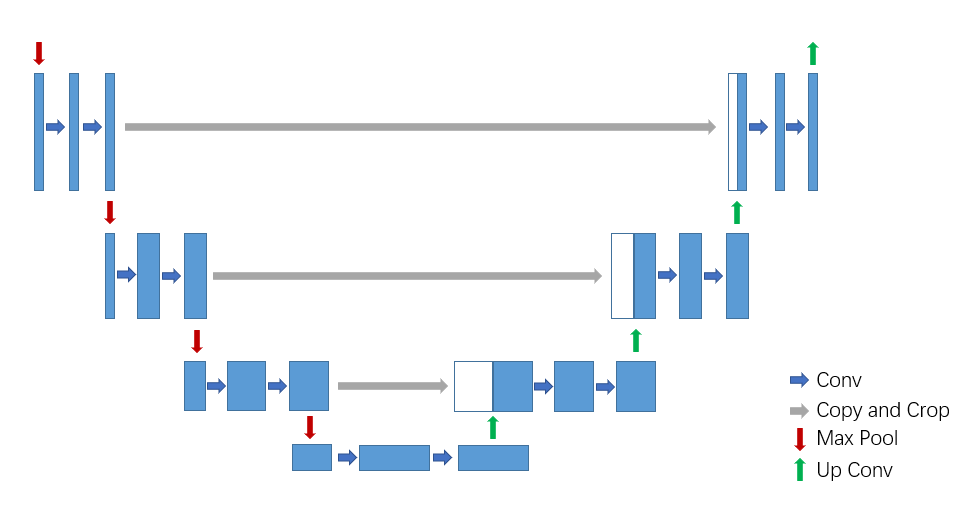

构建混合量子神经网络¶

由于量子线路可以和经典神经网络一起进行自动微分的计算,

因此我们可以使用VQNet的2维卷积层 Conv2D ,池化层 MaxPool2D ,全连接层 Linear 以及刚才构建的量子线路circuit构建模型。

通过以下代码中继承于VQNet自动微分模块 Module 的 Net 以及 Hybrid 类的定义,以及模型前传函数 forward() 中对数据前向计算的定义,我们构建了一个可以自动微分的模型

将本例中MNIST的数据进行卷积,降维,量子编码,测量,获取分类任务所需的最终特征。

#量子计算层的前传和梯度计算函数的定义,其需要继承于抽象类Module

class Hybrid(Module):

""" Hybrid quantum - Quantum layer definition """

def __init__(self, shift):

super(Hybrid, self).__init__()

self.shift = shift

def forward(self, input):

self.input = input

expectation_z = circuit(np.array(input.data))

result = [[expectation_z]]

requires_grad = input.requires_grad

def _backward(g, input):

""" Backward pass computation """

input_list = np.array(input.data)

shift_right = input_list + np.ones(input_list.shape) * self.shift

shift_left = input_list - np.ones(input_list.shape) * self.shift

gradients = []

for i in range(len(input_list)):

expectation_right = circuit(shift_right[i])

expectation_left = circuit(shift_left[i])

gradient = expectation_right - expectation_left

gradients.append(gradient)

gradients = np.array([gradients]).T

return gradients * np.array(g)

nodes = []

if input.requires_grad:

nodes.append(QTensor.GraphNode(tensor=input, df=lambda g: _backward(g, input)))

return QTensor(data=result, requires_grad=requires_grad, nodes=nodes)

#模型定义

class Net(Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = Conv2D(input_channels=1, output_channels=6, kernel_size=(5, 5), stride=(1, 1), padding="valid")

self.maxpool1 = MaxPool2D([2, 2], [2, 2], padding="valid")

self.conv2 = Conv2D(input_channels=6, output_channels=16, kernel_size=(5, 5), stride=(1, 1), padding="valid")

self.maxpool2 = MaxPool2D([2, 2], [2, 2], padding="valid")

self.fc1 = Linear(input_channels=256, output_channels=64)

self.fc2 = Linear(input_channels=64, output_channels=1)

self.hybrid = Hybrid(np.pi / 2)

self.fc3 = Linear(input_channels=1, output_channels=2)

def forward(self, x):

x = F.ReLu()(self.conv1(x)) # 1 6 24 24

x = self.maxpool1(x)

x = F.ReLu()(self.conv2(x)) # 1 16 8 8

x = self.maxpool2(x)

x = tensor.flatten(x, 1) # 1 256

x = F.ReLu()(self.fc1(x)) # 1 64

x = self.fc2(x) # 1 1

x = self.hybrid(x)

x = self.fc3(x)

return x

训练和测试¶

通过上面代码示例,我们已经定义了模型。与经典神经网络模型训练类似, 我们还需要做的是实例化该模型,定义损失函数以及优化器以及定义整个训练测试流程。 对于形如下图的混合神经网络模型,我们通过循环输入数据前向计算损失值,并在反向计算中自动计算出各个待训练参数的梯度,并使用优化器进行参数优化,直到迭代次数满足预设值。

x_train, y_train, x_test, y_test = data_select(1000, 100)

#实例化

model = Net()

#使用Adam完成此任务就足够了,model.parameters()是模型需要计算的参数。

optimizer = Adam(model.parameters(), lr=0.005)

#分类任务使用交叉熵函数

loss_func = CategoricalCrossEntropy()

#训练次数

epochs = 10

train_loss_list = []

val_loss_list = []

train_acc_list =[]

val_acc_list = []

for epoch in range(1, epochs):

total_loss = []

model.train()

batch_size = 1

correct = 0

n_train = 0

for x, y in data_generator(x_train, y_train, batch_size=1, shuffle=True):

x = x.reshape(-1, 1, 28, 28)

optimizer.zero_grad()

output = model(x)

loss = loss_func(y, output)

loss_np = np.array(loss.data)

np_output = np.array(output.data, copy=False)

mask = (np_output.argmax(1) == y.argmax(1))

correct += np.sum(np.array(mask))

n_train += batch_size

loss.backward()

optimizer._step()

total_loss.append(loss_np)

train_loss_list.append(np.sum(total_loss) / len(total_loss))

train_acc_list.append(np.sum(correct) / n_train)

print("{:.0f} loss is : {:.10f}".format(epoch, train_loss_list[-1]))

model.eval()

correct = 0

n_eval = 0

for x, y in data_generator(x_test, y_test, batch_size=1, shuffle=True):

x = x.reshape(-1, 1, 28, 28)

output = model(x)

loss = loss_func(y, output)

loss_np = np.array(loss.data)

np_output = np.array(output.data, copy=False)

mask = (np_output.argmax(1) == y.argmax(1))

correct += np.sum(np.array(mask))

n_eval += 1

total_loss.append(loss_np)

print(f"Eval Accuracy: {correct / n_eval}")

val_loss_list.append(np.sum(total_loss) / len(total_loss))

val_acc_list.append(np.sum(correct) / n_eval)

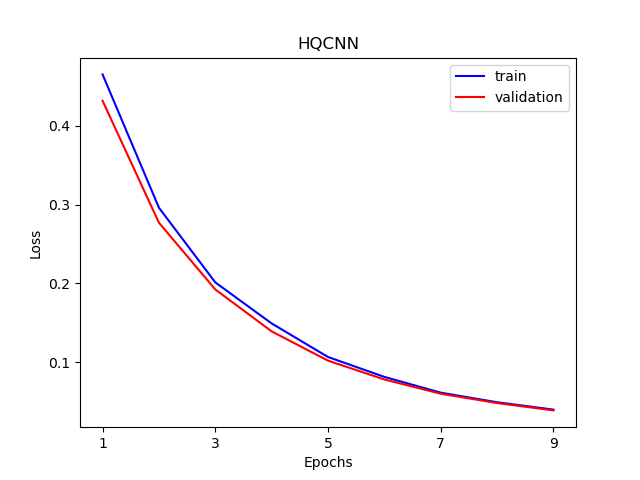

数据可视化¶

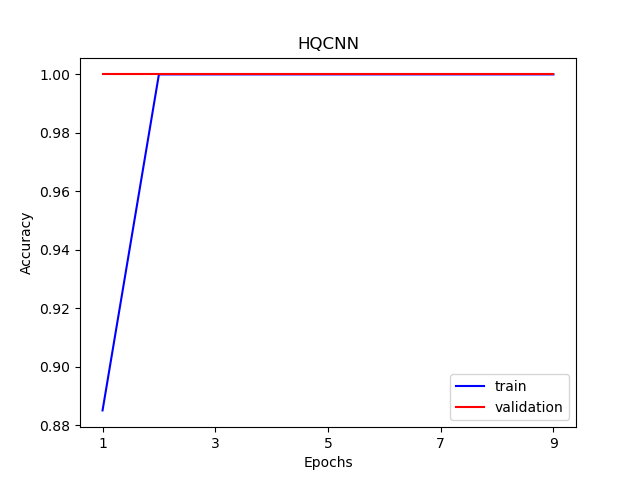

训练和测试数据上的数据损失函数与准确率的可视化曲线。

import os

plt.figure()

xrange = range(1,len(train_loss_list)+1)

figure_path = os.path.join(os.getcwd(), 'HQCNN LOSS.png')

plt.plot(xrange,train_loss_list, color="blue", label="train")

plt.plot(xrange,val_loss_list, color="red", label="validation")

plt.title('HQCNN')

plt.xlabel("Epochs")

plt.ylabel("Loss")

plt.xticks(np.arange(1, epochs +1,step = 2))

plt.legend(loc="upper right")

plt.savefig(figure_path)

plt.show()

plt.figure()

figure_path = os.path.join(os.getcwd(), 'HQCNN Accuracy.png')

plt.plot(xrange,train_acc_list, color="blue", label="train")

plt.plot(xrange,val_acc_list, color="red", label="validation")

plt.title('HQCNN')

plt.xlabel("Epochs")

plt.ylabel("Accuracy")

plt.xticks(np.arange(1, epochs +1,step = 2))

plt.legend(loc="lower right")

plt.savefig(figure_path)

plt.show()

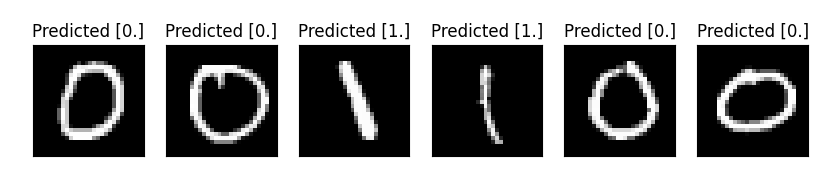

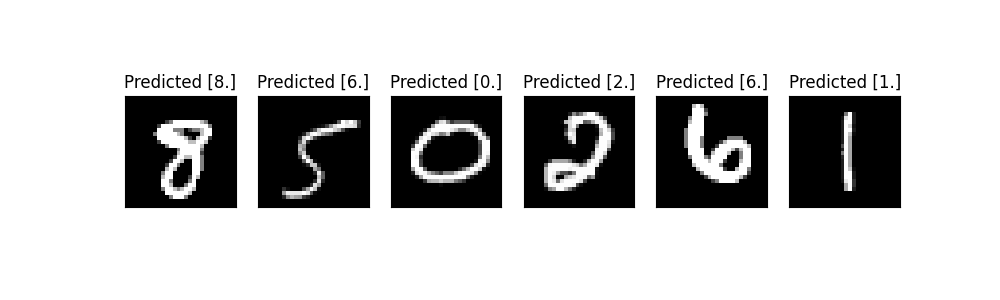

n_samples_show = 6

count = 0

fig, axes = plt.subplots(nrows=1, ncols=n_samples_show, figsize=(10, 3))

model.eval()

for x, y in data_generator(x_test, y_test, batch_size=1, shuffle=True):

if count == n_samples_show:

break

x = x.reshape(-1, 1, 28, 28)

output = model(x)

pred = QTensor.argmax(output, [1],False)

axes[count].imshow(x[0].squeeze(), cmap='gray')

axes[count].set_xticks([])

axes[count].set_yticks([])

axes[count].set_title('Predicted {}'.format(np.array(pred.data)))

count += 1

plt.show()

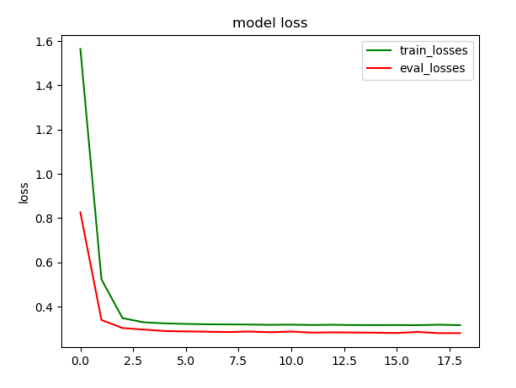

2.混合量子经典迁移学习模型¶

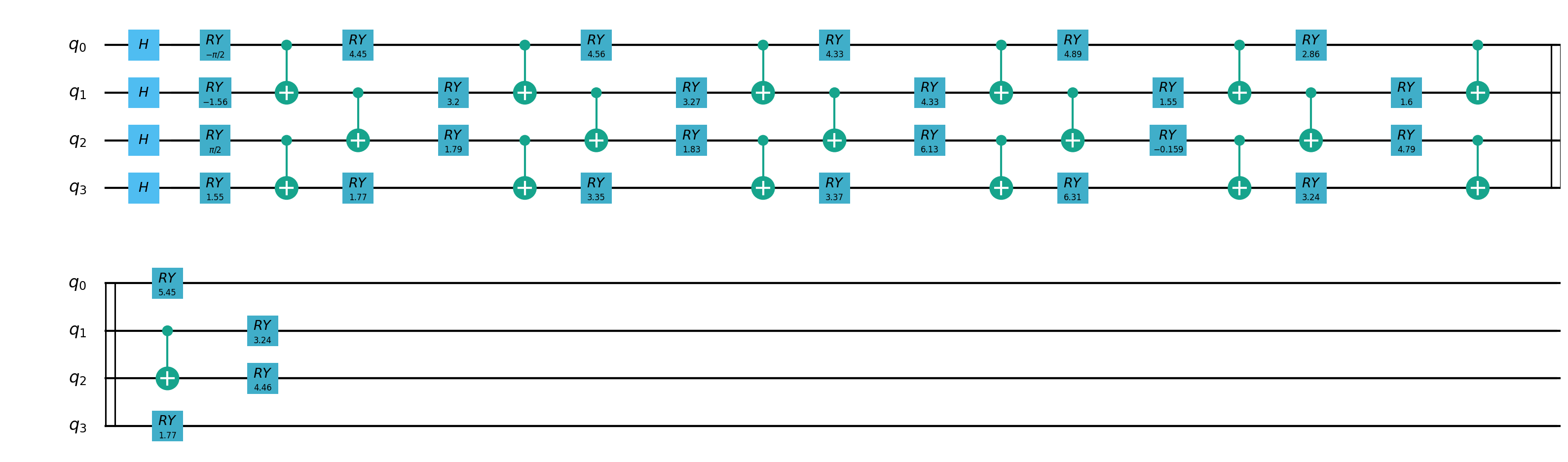

我们将一种称为迁移学习的机器学习方法应用于基于混合经典量子网络的图像分类器。我们将编写一个将pyQPanda与VQNet集成的简单示例。 迁移学习是一种成熟的人工神经网络训练技术,它基于一般直觉,即如果预训练的网络擅长解决给定的问题,那么,只需一些额外的训练,它也可以用来解决一个不同但相关的问题。

量子部分线路图

"""

Quantum Classic Nerual Network Transfer Learning demo

"""

import os

import os.path

import gzip

import struct

import numpy as np

import sys

sys.path.insert(0,"../")

from pyvqnet.nn.module import Module

from pyvqnet.nn.linear import Linear

from pyvqnet.nn.conv import Conv2D

from pyvqnet.utils.storage import load_parameters, save_parameters

from pyvqnet.nn import activation as F

from pyvqnet.nn.pooling import MaxPool2D

from pyvqnet.nn.loss import SoftmaxCrossEntropy

from pyvqnet.optim.sgd import SGD

from pyvqnet.optim.adam import Adam

from pyvqnet.data.data import data_generator

from pyvqnet.tensor import tensor

from pyvqnet.tensor.tensor import QTensor

from pyvqnet.qnn.quantumlayer import QuantumLayer

import pyqpanda as pq

import matplotlib.pyplot as plt

import matplotlib

try:

matplotlib.use("TkAgg")

except: #pylint:disable=bare-except

print("Can not use matplot TkAgg")

pass

try:

import urllib.request

except ImportError:

raise ImportError("You should use Python 3.x")

url_base = "http://yann.lecun.com/exdb/mnist/"

key_file = {

"train_img": "train-images-idx3-ubyte.gz",

"train_label": "train-labels-idx1-ubyte.gz",

"test_img": "t10k-images-idx3-ubyte.gz",

"test_label": "t10k-labels-idx1-ubyte.gz"

}

def _download(dataset_dir, file_name):

"""

Download dataset

"""

file_path = dataset_dir + "/" + file_name

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as file:

file_path_ungz = file_path[:-3].replace("\\", "/")

if not os.path.exists(file_path_ungz):

open(file_path_ungz, "wb").write(file.read())

return

print("Downloading " + file_name + " ... ")

urllib.request.urlretrieve(url_base + file_name, file_path)

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as file:

file_path_ungz = file_path[:-3].replace("\\", "/")

file_path_ungz = file_path_ungz.replace("-idx", ".idx")

if not os.path.exists(file_path_ungz):

open(file_path_ungz, "wb").write(file.read())

print("Done")

def download_mnist(dataset_dir):

for v in key_file.values():

_download(dataset_dir, v)

if not os.path.exists("./result"):

os.makedirs("./result")

else:

pass

class CNN(Module):

"""

Classical CNN

"""

def __init__(self):

super(CNN, self).__init__()

self.conv1 = Conv2D(input_channels=1,

output_channels=16,

kernel_size=(3, 3),

stride=(1, 1),

padding="valid")

self.relu1 = F.ReLu()

self.conv2 = Conv2D(input_channels=16,

output_channels=32,

kernel_size=(3, 3),

stride=(1, 1),

padding="valid")

self.relu2 = F.ReLu()

self.maxpool2 = MaxPool2D([2, 2], [2, 2], padding="valid")

self.conv3 = Conv2D(input_channels=32,

output_channels=64,

kernel_size=(3, 3),

stride=(1, 1),

padding="valid")

self.relu3 = F.ReLu()

self.conv4 = Conv2D(input_channels=64,

output_channels=128,

kernel_size=(3, 3),

stride=(1, 1),

padding="valid")

self.relu4 = F.ReLu()

self.maxpool4 = MaxPool2D([2, 2], [2, 2], padding="valid")

self.fc1 = Linear(input_channels=128 * 4 * 4, output_channels=1024)

self.fc2 = Linear(input_channels=1024, output_channels=128)

self.fc3 = Linear(input_channels=128, output_channels=10)

def forward(self, x):

x = self.relu1(self.conv1(x))

x = self.maxpool2(self.relu2(self.conv2(x)))

x = self.relu3(self.conv3(x))

x = self.maxpool4(self.relu4(self.conv4(x)))

x = tensor.flatten(x, 1)

x = F.ReLu()(self.fc1(x))

x = F.ReLu()(self.fc2(x))

x = self.fc3(x)

return x

def load_mnist(dataset="training_data",

digits=np.arange(2),

path="./"):

"""

Load mnist data

"""

from array import array as pyarray

download_mnist(path)

if dataset == "training_data":

fname_image = os.path.join(path, "train-images.idx3-ubyte").replace(

"\\", "/")

fname_label = os.path.join(path, "train-labels.idx1-ubyte").replace(

"\\", "/")

elif dataset == "testing_data":

fname_image = os.path.join(path, "t10k-images.idx3-ubyte").replace(

"\\", "/")

fname_label = os.path.join(path, "t10k-labels.idx1-ubyte").replace(

"\\", "/")

else:

raise ValueError("dataset must be 'training_data' or 'testing_data'")

flbl = open(fname_label, "rb")

_, size = struct.unpack(">II", flbl.read(8))

lbl = pyarray("b", flbl.read())

flbl.close()

fimg = open(fname_image, "rb")

_, size, rows, cols = struct.unpack(">IIII", fimg.read(16))

img = pyarray("B", fimg.read())

fimg.close()

ind = [k for k in range(size) if lbl[k] in digits]

num = len(ind)

images = np.zeros((num, rows, cols))

labels = np.zeros((num, 1), dtype=int)

for i in range(len(ind)):

images[i] = np.array(img[ind[i] * rows * cols:(ind[i] + 1) * rows *

cols]).reshape((rows, cols))

labels[i] = lbl[ind[i]]

return images, labels

train_size = 50

eval_size = 2

EPOCHES = 10

def classcal_cnn_model_training():

"""

load train data

"""

x_train, y_train = load_mnist("training_data", digits=np.arange(10))

x_test, y_test = load_mnist("testing_data", digits=np.arange(10))

x_train = x_train[:train_size]

y_train = y_train[:train_size]

x_test = x_test[:eval_size]

y_test = y_test[:eval_size]

x_train = x_train / 255

x_test = x_test / 255

y_train = np.eye(10)[y_train].reshape(-1, 10)

y_test = np.eye(10)[y_test].reshape(-1, 10)

model = CNN()

optimizer = SGD(model.parameters(), lr=0.005)

loss_func = SoftmaxCrossEntropy()

epochs = EPOCHES

loss_list = []

model.train()

save_flag = True

temp_loss = 0

for epoch in range(1, epochs):

total_loss = []

for x, y in data_generator(x_train,

y_train,

batch_size=4,

shuffle=True):

x = x.reshape(-1, 1, 28, 28)

optimizer.zero_grad()

# Forward pass

output = model(x)

# Calculating loss

loss = loss_func(y, output) # target output

loss_np = np.array(loss.data)

# Backward pass

loss.backward()

# Optimize the weights

optimizer._step()

total_loss.append(loss_np)

loss_list.append(np.sum(total_loss) / len(total_loss))

print("{:.0f} loss is : {:.10f}".format(epoch, loss_list[-1]))

if save_flag:

temp_loss = loss_list[-1]

save_parameters(model.state_dict(), "./result/QCNN_TL_1.model")

save_flag = False

else:

if temp_loss > loss_list[-1]:

temp_loss = loss_list[-1]

save_parameters(model.state_dict(), "./result/QCNN_TL_1.model")

model.eval()

correct = 0

n_eval = 0

for x, y in data_generator(x_test, y_test, batch_size=4, shuffle=True):

x = x.reshape(-1, 1, 28, 28)

output = model(x)

loss = loss_func(y, output)

np_output = np.array(output.data, copy=False)

mask = (np_output.argmax(1) == y.argmax(1))

correct += np.sum(np.array(mask))

n_eval += 1

print(f"Eval Accuracy: {correct / n_eval}")

n_samples_show = 6

count = 0

_, axes = plt.subplots(nrows=1, ncols=n_samples_show, figsize=(10, 3))

model.eval()

for x, y in data_generator(x_test, y_test, batch_size=1, shuffle=True):

if count == n_samples_show:

break

x = x.reshape(-1, 1, 28, 28)

output = model(x)

pred = QTensor.argmax(output, [1],False)

axes[count].imshow(x[0].squeeze(), cmap="gray")

axes[count].set_xticks([])

axes[count].set_yticks([])

axes[count].set_title("Predicted {}".format(np.array(pred.data)))

count += 1

plt.show()

def classical_cnn_transferlearning_predict():

"""

Use test data to eval classic NN model

"""

x_test, y_test = load_mnist("testing_data", digits=np.arange(10))

x_test = x_test[:eval_size]

y_test = y_test[:eval_size]

x_test = x_test / 255

y_test = np.eye(10)[y_test].reshape(-1, 10)

model = CNN()

model_parameter = load_parameters("./result/QCNN_TL_1.model")

model.load_state_dict(model_parameter)

model.eval()

correct = 0

n_eval = 0

for x, y in data_generator(x_test, y_test, batch_size=1, shuffle=True):

x = x.reshape(-1, 1, 28, 28)

output = model(x)

np_output = np.array(output.data, copy=False)

mask = (np_output.argmax(1) == y.argmax(1))

correct += np.sum(np.array(mask))

n_eval += 1

print(f"Eval Accuracy: {correct / n_eval}")

n_samples_show = 6

count = 0

_, axes = plt.subplots(nrows=1, ncols=n_samples_show, figsize=(10, 3))

model.eval()

for x, y in data_generator(x_test, y_test, batch_size=1, shuffle=True):

if count == n_samples_show:

break

x = x.reshape(-1, 1, 28, 28)

output = model(x)

pred = QTensor.argmax(output, [1],False)

axes[count].imshow(x[0].squeeze(), cmap="gray")

axes[count].set_xticks([])

axes[count].set_yticks([])

axes[count].set_title("Predicted {}".format(np.array(pred.data)))

count += 1

plt.show()

n_qubits = 4 # Number of qubits

q_depth = 6 # Depth of the quantum circuit (number of variational layers)

def Q_H_layer(qubits, nqubits):#pylint:disable=invalid-name

"""Layer of single-qubit Hadamard gates.

"""

circuit = pq.QCircuit()

for idx in range(nqubits):

circuit.insert(pq.H(qubits[idx]))

return circuit

def Q_RY_layer(qubits, w):#pylint:disable=invalid-name

"""

Layer of parametrized qubit rotations around the y axis.

"""

circuit = pq.QCircuit()

for idx, element in enumerate(w):

circuit.insert(pq.RY(qubits[idx], element))

return circuit

def Q_entangling_layer(qubits, nqubits):#pylint:disable=invalid-name

"""

Layer of CNOTs followed by another shifted layer of CNOT.

"""

# In other words it should apply something like :

# CNOT CNOT CNOT CNOT... CNOT

# CNOT CNOT CNOT... CNOT

circuit = pq.QCircuit()

for i in range(0, nqubits - 1,

2): # Loop over even indices: i=0,2,...N-2

circuit.insert(pq.CNOT(qubits[i], qubits[i + 1]))

for i in range(1, nqubits - 1,

2): # Loop over odd indices: i=1,3,...N-3

circuit.insert(pq.CNOT(qubits[i], qubits[i + 1]))

return circuit

def quantum_net(q_input_features, q_weights_flat, qubits, cubits,#pylint:disable=unused-argument

machine):

"""

The variational quantum circuit.

"""

machine = pq.CPUQVM()

machine.init_qvm()

qubits = machine.qAlloc_many(n_qubits)

circuit = pq.QCircuit()

# Reshape weights

q_weights = q_weights_flat.reshape([q_depth, n_qubits])

# Start from state |+> , unbiased w.r.t. |0> and |1>

circuit.insert(Q_H_layer(qubits, n_qubits))

# Embed features in the quantum node

circuit.insert(Q_RY_layer(qubits, q_input_features))

# Sequence of trainable variational layers

for k in range(q_depth):

circuit.insert(Q_entangling_layer(qubits, n_qubits))

circuit.insert(Q_RY_layer(qubits, q_weights[k]))

# Expectation values in the Z basis

prog = pq.QProg()

prog.insert(circuit)

exp_vals = []

for position in range(n_qubits):

pauli_str = "Z" + str(position)

pauli_map = pq.PauliOperator(pauli_str, 1)

hamiltion = pauli_map.toHamiltonian(True)

exp = machine.get_expectation(prog, hamiltion, qubits)

exp_vals.append(exp)

return exp_vals

def quantum_cnn_transferlearning():

"""

The quantum cnn transferLearning model main function

"""

class Q_DressedQuantumNet(Module):#pylint:disable=invalid-name

"""

module implementing the *dressed* quantum net.

"""

def __init__(self):

"""

Definition of the *dressed* layout.

"""

super().__init__()

self.pre_net = Linear(128, n_qubits)

self.post_net = Linear(n_qubits, 10)

self.qlayer = QuantumLayer(quantum_net, q_depth * n_qubits,

"cpu", n_qubits, n_qubits)

def forward(self, input_features):

"""

Defining how tensors are supposed to move through the *dressed* quantum

net.

"""

# obtain the input features for the quantum circuit

# by reducing the feature dimension from 512 to 4

pre_out = self.pre_net(input_features)

q_in = tensor.tanh(pre_out) * np.pi / 2.0

q_out_elem = self.qlayer(q_in)

result = q_out_elem

# return the two-dimensional prediction from the postprocessing layer

return self.post_net(result)

x_train, y_train = load_mnist("training_data",

digits=np.arange(10)) # 下载训练数据

x_test, y_test = load_mnist("testing_data", digits=np.arange(10))

x_train = x_train[:train_size]

y_train = y_train[:train_size]

x_test = x_test[:eval_size]

y_test = y_test[:eval_size]

x_train = x_train / 255

x_test = x_test / 255

y_train = np.eye(10)[y_train].reshape(-1, 10)

y_test = np.eye(10)[y_test].reshape(-1, 10)

model = CNN()

model_param = load_parameters("./result/QCNN_TL_1.model")

model.load_state_dict(model_param)

loss_func = SoftmaxCrossEntropy()

epochs = EPOCHES

loss_list = []

eval_losses = []

model_hybrid = model

print(model_hybrid)

for param in model_hybrid.parameters():

param.requires_grad = False

model_hybrid.fc3 = Q_DressedQuantumNet()

optimizer_hybrid = Adam(model_hybrid.fc3.parameters(), lr=0.001)

model_hybrid.train()

save_flag = True

temp_loss = 0

for epoch in range(1, epochs):

total_loss = []

for x, y in data_generator(x_train,

y_train,

batch_size=4,

shuffle=True):

x = x.reshape(-1, 1, 28, 28)

optimizer_hybrid.zero_grad()

# Forward pass

output = model_hybrid(x)

loss = loss_func(y, output) # target output

loss_np = np.array(loss.data)

# Backward pass

loss.backward()

# Optimize the weights

optimizer_hybrid._step()

total_loss.append(loss_np)

loss_list.append(np.sum(total_loss) / len(total_loss))

print("{:.0f} loss is : {:.10f}".format(epoch, loss_list[-1]))

if save_flag:

temp_loss = loss_list[-1]

save_parameters(model_hybrid.fc3.state_dict(),

"./result/QCNN_TL_FC3.model")

save_parameters(model_hybrid.state_dict(),

"./result/QCNN_TL_ALL.model")

save_flag = False

else:

if temp_loss > loss_list[-1]:

temp_loss = loss_list[-1]

save_parameters(model_hybrid.fc3.state_dict(),

"./result/QCNN_TL_FC3.model")

save_parameters(model_hybrid.state_dict(),

"./result/QCNN_TL_ALL.model")

correct = 0

n_eval = 0

loss_temp = []

for x1, y1 in data_generator(x_test,

y_test,

batch_size=4,

shuffle=True):

x1 = x1.reshape(-1, 1, 28, 28)

output = model_hybrid(x1)

loss = loss_func(y1, output)

np_loss = np.array(loss.data)

np_output = np.array(output.data, copy=False)

mask = (np_output.argmax(1) == y1.argmax(1))

correct += np.sum(np.array(mask))

n_eval += 1

loss_temp.append(np_loss)

eval_losses.append(np.sum(loss_temp) / n_eval)

print("{:.0f} eval loss is : {:.10f}".format(epoch, eval_losses[-1]))

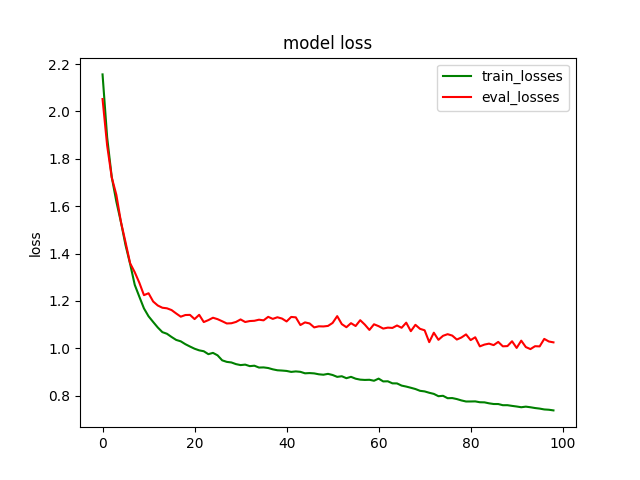

plt.title("model loss")

plt.plot(loss_list, color="green", label="train_losses")

plt.plot(eval_losses, color="red", label="eval_losses")

plt.ylabel("loss")

plt.legend(["train_losses", "eval_losses"])

plt.savefig("qcnn_transfer_learning_classical")

plt.show()

plt.close()

n_samples_show = 6

count = 0

_, axes = plt.subplots(nrows=1, ncols=n_samples_show, figsize=(10, 3))

model_hybrid.eval()

for x, y in data_generator(x_test, y_test, batch_size=1, shuffle=True):

if count == n_samples_show:

break

x = x.reshape(-1, 1, 28, 28)

output = model_hybrid(x)

pred = QTensor.argmax(output, [1],False)

axes[count].imshow(x[0].squeeze(), cmap="gray")

axes[count].set_xticks([])

axes[count].set_yticks([])

axes[count].set_title("Predicted {}".format(np.array(pred.data)))

count += 1

plt.show()

def quantum_cnn_transferlearning_predict():

"""

Eval quantum cnn transferlearning model on test data

"""

n_qubits = 4 # Number of qubits

q_depth = 6 # Depth of the quantum circuit (number of variational layers)

def Q_H_layer(qubits, nqubits):#pylint:disable=invalid-name

"""Layer of single-qubit Hadamard gates.

"""

circuit = pq.QCircuit()

for idx in range(nqubits):

circuit.insert(pq.H(qubits[idx]))

return circuit

def Q_RY_layer(qubits, w):#pylint:disable=invalid-name

"""Layer of parametrized qubit rotations around the y axis.

"""

circuit = pq.QCircuit()

for idx, element in enumerate(w):

circuit.insert(pq.RY(qubits[idx], element))

return circuit

def Q_entangling_layer(qubits, nqubits):#pylint:disable=invalid-name

"""Layer of CNOTs followed by another shifted layer of CNOT.

"""

# In other words it should apply something like :

# CNOT CNOT CNOT CNOT... CNOT

# CNOT CNOT CNOT... CNOT

circuit = pq.QCircuit()

for i in range(0, nqubits - 1,

2): # Loop over even indices: i=0,2,...N-2

circuit.insert(pq.CNOT(qubits[i], qubits[i + 1]))

for i in range(1, nqubits - 1,

2): # Loop over odd indices: i=1,3,...N-3

circuit.insert(pq.CNOT(qubits[i], qubits[i + 1]))

return circuit

def quantum_net(q_input_features, q_weights_flat, qubits, cubits,#pylint:disable=unused-argument

machine):

"""

The variational quantum circuit.

"""

machine = pq.CPUQVM()

machine.init_qvm()

qubits = machine.qAlloc_many(n_qubits)

circuit = pq.QCircuit()

# Reshape weights

q_weights = q_weights_flat.reshape([q_depth, n_qubits])

# Start from state |+> , unbiased w.r.t. |0> and |1>

circuit.insert(Q_H_layer(qubits, n_qubits))

# Embed features in the quantum node

circuit.insert(Q_RY_layer(qubits, q_input_features))

# Sequence of trainable variational layers

for k in range(q_depth):

circuit.insert(Q_entangling_layer(qubits, n_qubits))

circuit.insert(Q_RY_layer(qubits, q_weights[k]))

# Expectation values in the Z basis

prog = pq.QProg()

prog.insert(circuit)

exp_vals = []

for position in range(n_qubits):

pauli_str = "Z" + str(position)

pauli_map = pq.PauliOperator(pauli_str, 1)

hamiltion = pauli_map.toHamiltonian(True)

exp = machine.get_expectation(prog, hamiltion, qubits)

exp_vals.append(exp)

return exp_vals

class Q_DressedQuantumNet(Module):

"""

module implementing the *dressed* quantum net.

"""

def __init__(self):

"""

Definition of the *dressed* layout.

"""

super().__init__()

self.pre_net = Linear(128, n_qubits)

self.post_net = Linear(n_qubits, 10)

self.qlayer = QuantumLayer(quantum_net, q_depth * n_qubits,

"cpu", n_qubits, n_qubits)

def forward(self, input_features):

"""

Defining how tensors are supposed to move through the *dressed* quantum

net.

"""

# obtain the input features for the quantum circuit

# by reducing the feature dimension from 512 to 4

pre_out = self.pre_net(input_features)

q_in = tensor.tanh(pre_out) * np.pi / 2.0

q_out_elem = self.qlayer(q_in)

result = q_out_elem

# return the two-dimensional prediction from the postprocessing layer

return self.post_net(result)

x_train, y_train = load_mnist("training_data",

digits=np.arange(10))

x_test, y_test = load_mnist("testing_data", digits=np.arange(10))

x_train = x_train[:2000]

y_train = y_train[:2000]

x_test = x_test[:500]

y_test = y_test[:500]

x_train = x_train / 255

x_test = x_test / 255

y_train = np.eye(10)[y_train].reshape(-1, 10)

y_test = np.eye(10)[y_test].reshape(-1, 10)

# The second method: unified storage and unified reading

model = CNN()

model_hybrid = model

model_hybrid.fc3 = Q_DressedQuantumNet()

for param in model_hybrid.parameters():

param.requires_grad = False

model_param_quantum = load_parameters("./result/QCNN_TL_ALL.model")

model_hybrid.load_state_dict(model_param_quantum)

model_hybrid.eval()

loss_func = SoftmaxCrossEntropy()

eval_losses = []

correct = 0

n_eval = 0

loss_temp = []

eval_batch_size = 4

for x1, y1 in data_generator(x_test,

y_test,

batch_size=eval_batch_size,

shuffle=True):

x1 = x1.reshape(-1, 1, 28, 28)

output = model_hybrid(x1)

loss = loss_func(y1, output)

np_loss = np.array(loss.data)

np_output = np.array(output.data, copy=False)

mask = (np_output.argmax(1) == y1.argmax(1))

correct += np.sum(np.array(mask))

n_eval += 1

loss_temp.append(np_loss)

eval_losses.append(np.sum(loss_temp) / n_eval)

print(f"Eval Accuracy: {correct / (eval_batch_size*n_eval)}")

n_samples_show = 6

count = 0

_, axes = plt.subplots(nrows=1, ncols=n_samples_show, figsize=(10, 3))

model_hybrid.eval()

for x, _ in data_generator(x_test, y_test, batch_size=1, shuffle=True):

if count == n_samples_show:

break

x = x.reshape(-1, 1, 28, 28)

output = model_hybrid(x)

pred = QTensor.argmax(output, [1],False)

axes[count].imshow(x[0].squeeze(), cmap="gray")

axes[count].set_xticks([])

axes[count].set_yticks([])

axes[count].set_title("Predicted {}".format(np.array(pred.data)))

count += 1

plt.show()

if __name__ == "__main__":

if not os.path.exists("./result/QCNN_TL_1.model"):

classcal_cnn_model_training()

classical_cnn_transferlearning_predict()

#train quantum circuits.

quantum_cnn_transferlearning()

#eval quantum circuits.

quantum_cnn_transferlearning_predict()

训练集上Loss情况

测试集上运行分类情况

3.混合量子经典的QUnet网络模型¶

图像分割(Image Segmeation)是计算机视觉研究中的一个经典难题,已经成为图像理解领域关注的一个热点,图像分割是图像分析的第一步,是计算机视觉的基础, 是图像理解的重要组成部分,同时也是图像处理中最困难的问题之一。所谓图像分割是指根据灰度、彩色、空间纹理、几何形状等特征把图像 划分成若干个互不相交的区域,使得这些特征在同一区域内表现出一致性或相似性,而在不同区域间表现出明显的不同。 简单而言就是给定一张图片,对图片上的每一个像素点分类。将不同分属不同物体的像素区域分开。 Unet 是一种用于解决经典图像分割的算法。 在这里我们探索如何将经典神经网络部分量化,以创建适合量子数据的 QUnet - Quantum Unet 神经网络。我们将编写一个将 pyQPanda 与 VQNet 集成的简单示例。 QUnet主要是用于解决图像分割的技术。

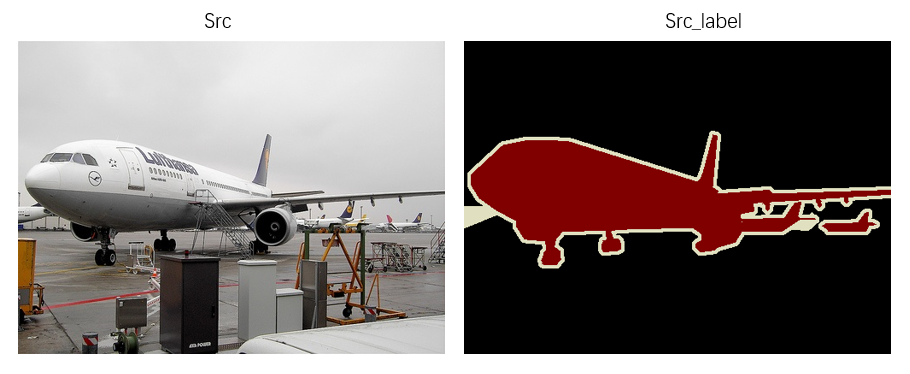

数据准备¶

我们将使用VOCdevkit/VOC2012官方库的数据: VOC2012 , 作为图像分割数据。 这些样本分为训练数据 training_data 和测试数据 testing_data,文件夹中包含images 和 labels。

构建量子线路¶

在本例中,我们使用本源量子的 pyQPanda 定义了一个量子线路。将输入的3通道彩色图片数据压缩为单通道的灰度图片并进行存储, 再利用量子卷积操作对数据的特征进行提取降维操作。

导入必须的库和函数

import os

import numpy as np

from pyvqnet.nn.module import Module

from pyvqnet.nn.conv import Conv2D, ConvT2D

from pyvqnet.nn import activation as F

from pyvqnet.nn.batch_norm import BatchNorm2d

from pyvqnet.nn.loss import BinaryCrossEntropy

from pyvqnet.optim.adam import Adam

from pyvqnet.dtype import *

from pyvqnet.tensor import tensor

from pyvqnet.tensor.tensor import QTensor

import pyqpanda as pq

from pyqpanda import *

from pyvqnet.utils.storage import load_parameters, save_parameters

import matplotlib

try:

matplotlib.use('TkAgg')

except:

pass

import matplotlib.pyplot as plt

import cv2

预处理数据

#预处理数据

class PreprocessingData:

def __init__(self, path):

self.path = path

self.x_data = []

self.y_label = []

def processing(self):

list_path = os.listdir((self.path+"/images"))

for i in range(len(list_path)):

temp_data = cv2.imread(self.path+"/images" + '/' + list_path[i], cv2.IMREAD_COLOR)

temp_data = cv2.resize(temp_data, (128, 128))

grayimg = cv2.cvtColor(temp_data, cv2.COLOR_BGR2GRAY)

temp_data = grayimg.reshape(temp_data.shape[0], temp_data.shape[0], 1).astype(np.float32)

self.x_data.append(temp_data)

label_data = cv2.imread(self.path+"/labels" + '/' +list_path[i].split(".")[0] + ".png", cv2.IMREAD_COLOR)

label_data = cv2.resize(label_data, (128, 128))

label_data = cv2.cvtColor(label_data, cv2.COLOR_BGR2GRAY)

label_data = label_data.reshape(label_data.shape[0], label_data.shape[0], 1).astype(np.int64)

self.y_label.append(label_data)

return self.x_data, self.y_label

def read(self):

self.x_data, self.y_label = self.processing()

x_data = np.array(self.x_data)

y_label = np.array(self.y_label)

return x_data, y_label

#进行量子编码的线路

class QCNN_:

def __init__(self, image):

self.image = image

def encode_cir(self, qlist, pixels):

cir = pq.QCircuit()

for i, pix in enumerate(pixels):

theta = np.arctan(pix)

phi = np.arctan(pix**2)

cir.insert(pq.RY(qlist[i], theta))

cir.insert(pq.RZ(qlist[i], phi))

return cir

def entangle_cir(self, qlist):

k_size = len(qlist)

cir = pq.QCircuit()

for i in range(k_size):

ctr = i

ctred = i+1

if ctred == k_size:

ctred = 0

cir.insert(pq.CNOT(qlist[ctr], qlist[ctred]))

return cir

def qcnn_circuit(self, pixels):

k_size = len(pixels)

machine = pq.MPSQVM()

machine.init_qvm()

qlist = machine.qAlloc_many(k_size)

cir = pq.QProg()

cir.insert(self.encode_cir(qlist, np.array(pixels) * np.pi / 2))

cir.insert(self.entangle_cir(qlist))

result0 = machine.prob_run_list(cir, [qlist[0]], -1)

result1 = machine.prob_run_list(cir, [qlist[1]], -1)

result2 = machine.prob_run_list(cir, [qlist[2]], -1)

result3 = machine.prob_run_list(cir, [qlist[3]], -1)

result = [result0[-1]+result1[-1]+result2[-1]+result3[-1]]

machine.finalize()

return result

def quanconv_(image):

"""Convolves the input image with many applications of the same quantum circuit."""

out = np.zeros((64, 64, 1))

for j in range(0, 128, 2):

for k in range(0, 128, 2):

# Process a squared 2x2 region of the image with a quantum circuit

q_results = QCNN_(image).qcnn_circuit(

[

image[j, k, 0],

image[j, k + 1, 0],

image[j + 1, k, 0],

image[j + 1, k + 1, 0]

]

)

for c in range(1):

out[j // 2, k // 2, c] = q_results[c]

return out

def quantum_data_preprocessing(images):

quantum_images = []

for _, img in enumerate(images):

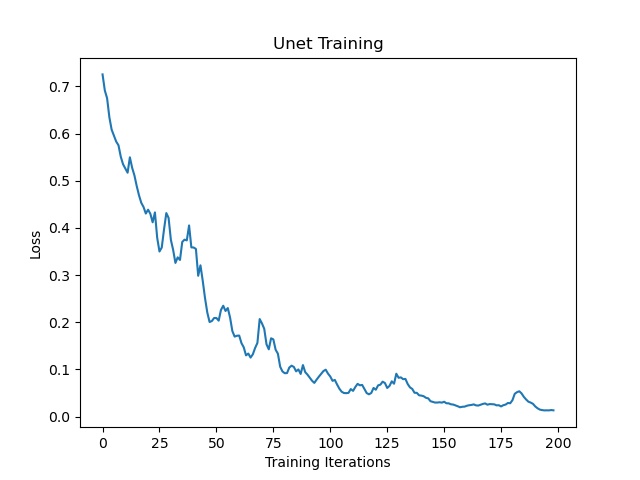

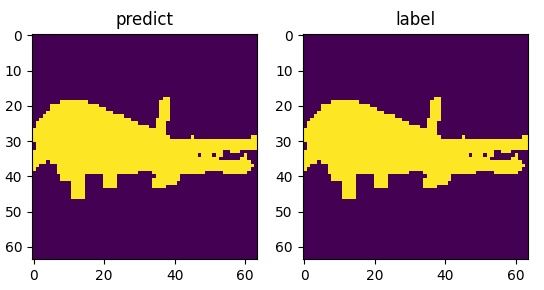

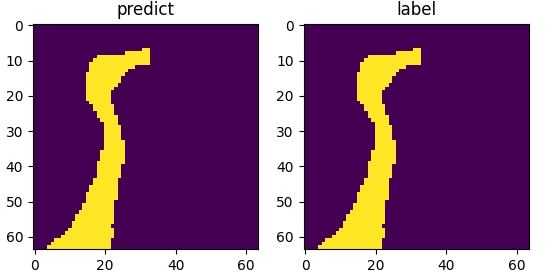

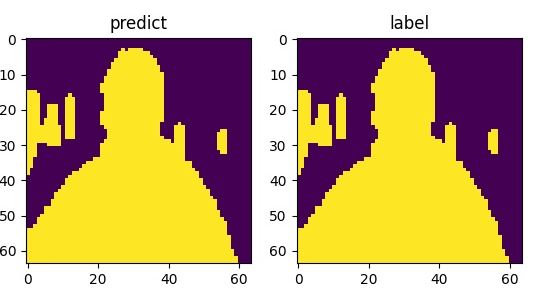

quantum_images.append(quanconv_(img))